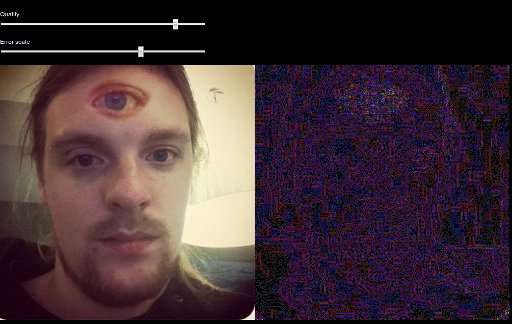

C2PA Content Credential Photo Forensics 12 Apr 2:07 AM (6 months ago)

C2PA Photo Forensics in Forensically

I have added support for reading C2PA metadata to my photo forensics tool Forensically. C2PA is the Coalition for Content Provenance and Authenticity. In their own words:

The Coalition for Content Provenance and Authenticity (C2PA) addresses the prevalence of misleading information online through the development of technical standards for certifying the source and history (or provenance) of media content.

The C2PA metadata is signed and optionally timestamped using a timestamp authority.

Cryptography & PKI

It’s important to note that digital signatures do not inherently guarantee trustworthiness. Even if the scheme is cryptographically sound you still need to trust everyone and everything with access to the keys.

Sadly the C2PA JavaScript library doesn’t reveal a lot of useful information, essentially just the Organization of the Subject from what I can tell.

    "signatureInfo": {
      "alg": "Es256",
      "issuer": "C2PA Test Signing Cert",
      "cert_serial_number": "640229841392226413189608867977836244731148734950",
      "time": "2025-04-06T20:32:57+00:00"
    }

Neither does contentcredentials.org/verify.

There is a “known certificate list” and an email address to send in your certificate to be included. I’m not exactly sure what information is validated before a certificate is included.

As is, I’d take the signatures displayed in Forensically with a big pinch of salt.

Privacy and Tracking Concerns

C2PA includes support for remote manifests.

There are definitely use cases where having remote manifests makes sense, for instance to keep the file size of an asset small. They can however be used as beacons to track a person viewing the metadata.

For Forensically I have decided to disable support for remote manifests, both using configuration options and by blocking requests using a Content Security Policy.

File Sizes

Another little headache while implementing this was the size of the c2pa-js library. Using my Vite setup it compiles down to about 350 kB for the main ES module, 165 kB for a worker and 5.4 MB for the WebAssembly module.

To mitigate the impact of this I’m lazy loading the library only when needed. This brought up another issue: how do I figure out whether an image contains C2PA data without loading all of that code?

There is the @contentauth/detector package for that but it also weighs about 70 kB of WASM and JavaScript.

Ultimately, I wrote my own detection code. Luckily the pattern to be searched for doesn’t repeat the initial byte anywhere. So searching for it can be done efficiently using a simplified version of Knuth-Morris-Pratt.

My JavaScript implementation weighs about 300 bytes after minification.
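The idea can be sketched as follows. This is not the code used in Forensically: the function name and the example marker are assumptions for illustration, and the byte sequence actually searched for isn't given here. Because the first byte of the pattern never occurs again inside it, a failed partial match cannot overlap the start of another match, so the scan never steps backwards and needs no failure table.

```javascript
// Simplified Knuth-Morris-Pratt search.
// Only valid for patterns whose first byte does not occur again later in the pattern.
function containsPattern(data, pattern) {
  if (pattern.length === 0) return true;
  let matched = 0; // number of pattern bytes matched so far
  for (let i = 0; i < data.length; i++) {
    if (data[i] === pattern[matched]) {
      matched++;
      if (matched === pattern.length) return true;
    } else {
      // The partial match cannot contain another start of the pattern,
      // so only the current byte can begin a new match.
      matched = data[i] === pattern[0] ? 1 : 0;
    }
  }
  return false;
}

// Hypothetical marker, for illustration only.
const toBytes = (text) => Uint8Array.from(text, (ch) => ch.charCodeAt(0));
const marker = toBytes('c2pa');
console.log(containsPattern(toBytes('....cc2pa....'), marker)); // true
console.log(containsPattern(toBytes('....c2p.....'), marker)); // false
```

Each input byte is looked at exactly once, which keeps the check cheap enough to run on every loaded image before deciding whether to fetch the full library.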

Impulse Response Creator 23 Mar 8:51 AM (7 months ago)

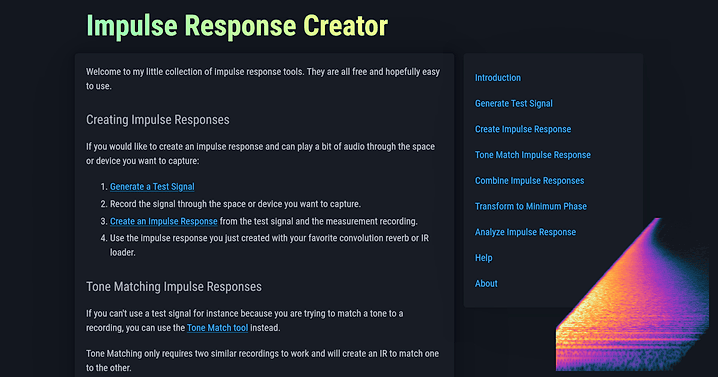

I got a little bit obsessed with convolution reverb and measuring impulse responses over the last few weeks. As part of that I’ve built myself a few command line tools to create and process impulse responses. I have now bundled these up into a web application, in the hope that they may be useful to others.

What are impulse responses and convolution reverbs

If you want to evaluate the acoustics of a room you are in, you can clap your hands and listen to the echos and reverberation of the clap. An impulse response is very similar to that. It’s the response of a system to being excited with a short impulse like the clap in the example before.

The impulse response can then be used to simulate the sound of the room using a process called convolution. An easy way to think of this is that for every point on the input signal the impulse response is played back with the volume (and polarity) adjusted to match the input. This cascade of echos will then match what that input would have sounded like in the room.
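The "play back the impulse response for every input sample" picture translates almost literally into code. The following is a minimal sketch of direct convolution; the function name is an assumption, and real convolution reverbs typically use FFT-based methods because this version needs one multiplication per pair of input and impulse response samples.

```javascript
// Direct (time domain) convolution of an input signal with an impulse response.
function convolve(input, impulseResponse) {
  if (input.length === 0 || impulseResponse.length === 0) return new Float64Array(0);
  const output = new Float64Array(input.length + impulseResponse.length - 1);
  for (let i = 0; i < input.length; i++) {
    // Every input sample triggers a copy of the impulse response,
    // scaled by the sample's value (volume and polarity).
    for (let j = 0; j < impulseResponse.length; j++) {
      output[i + j] += input[i] * impulseResponse[j];
    }
  }
  return output;
}

// A single echo at half volume, one sample later.
console.log(Array.from(convolve([1, 0, 0.5], [1, 0.5]))); // [1, 0.5, 0.5, 0.25]
```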

This principle isn’t limited to rooms, speaker cabinets, or audio. It works for any linear time-invariant system.

Capturing Impulse Responses

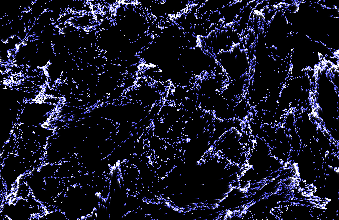

Spectrogram of the Impulse Response of a room.

In practice capturing accurate impulse responses is a bit more difficult than just clapping. Ideally the impulse used to excite the system is very brief. Infinitesimally brief. In order to get a good signal to noise ratio it should also be very loud, to get as much energy as possible into the system with that brief pulse.

The impulse generated by clapping hands isn’t very short, very intense, or easily reproducible. Better options are popping balloons or firing starter guns. Those methods are better than clapping but still far from ideal.

Can we avoid using an impulse to capture an impulse response?

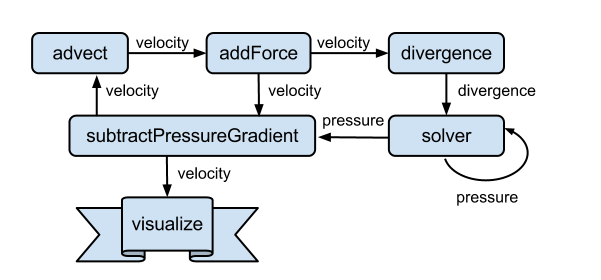

Sweeping Sines

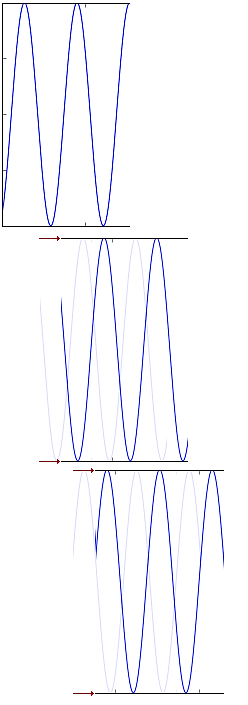

If we look at the problem from a frequency perspective an alternative approach becomes evident.

An ideal impulse is a short burst containing all frequencies at once. Instead of sending out all frequencies at once, we can send out different frequencies over time. To do so we can use a simple sine wave with a changing frequency over time. This test signal is also known as a sine sweep or chirp.

Now instead of an infinitesimally short pulse we can take as much time as we would like to send out our signal. This makes it easier to get a good signal to noise ratio and is much kinder to the equipment being measured.
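As an illustration, here is a sketch of a sweep generator. It assumes an exponential sweep, where the frequency rises by a constant number of octaves per second, as used in the swept-sine technique by Farina; the function and parameter names are made up for this example.

```javascript
// Exponential sine sweep from startHz to endHz.
// The instantaneous frequency is startHz * (endHz / startHz) ** (t / duration).
function exponentialSineSweep(startHz, endHz, durationSeconds, sampleRate) {
  const length = Math.round(durationSeconds * sampleRate);
  const sweep = new Float32Array(length);
  const logRatio = Math.log(endHz / startHz);
  const scale = (2 * Math.PI * startHz * durationSeconds) / logRatio;
  for (let n = 0; n < length; n++) {
    const t = n / sampleRate;
    // The phase is the integral of the instantaneous frequency.
    sweep[n] = Math.sin(scale * (Math.exp((t / durationSeconds) * logRatio) - 1));
  }
  return sweep;
}

const sweep = exponentialSineSweep(20, 20000, 10, 48000);
console.log(sweep.length); // 480000
```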

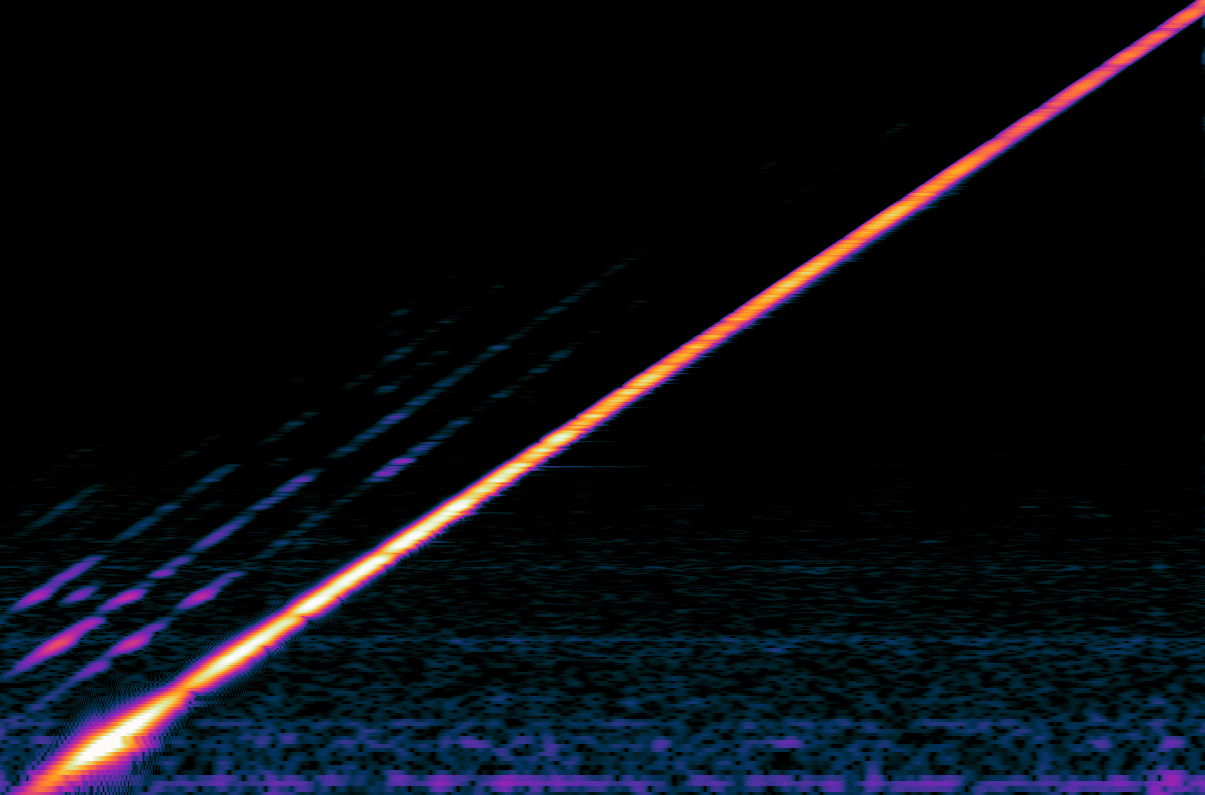

Spectrogram of a sine sweep.

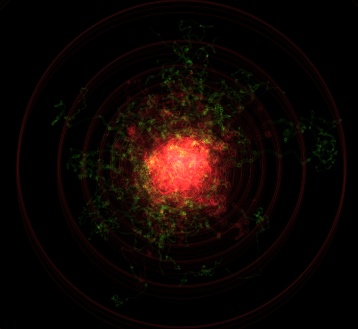

Response to the sine sweep.

Note: The parallel lines above the sine sweep are due to harmonic distortion introduced in my measurement process. The horizontal noise is due to hum picked up while measuring. Turns out getting good measurements in the real world is hard. But we can deal with those artifacts.

Deconvolution

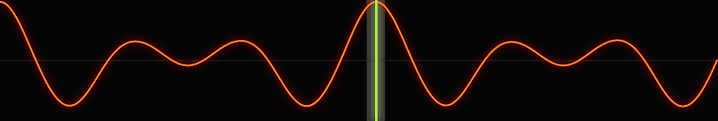

There is just one tiny problem: the response to our sine sweep isn’t a crisp impulse response. Instead it’s smeared out over time, just like our test signal is. In order to get the impulse response we need to undo this smearing over time. This process is called deconvolution and it transforms the response to the sine sweep into an impulse response.

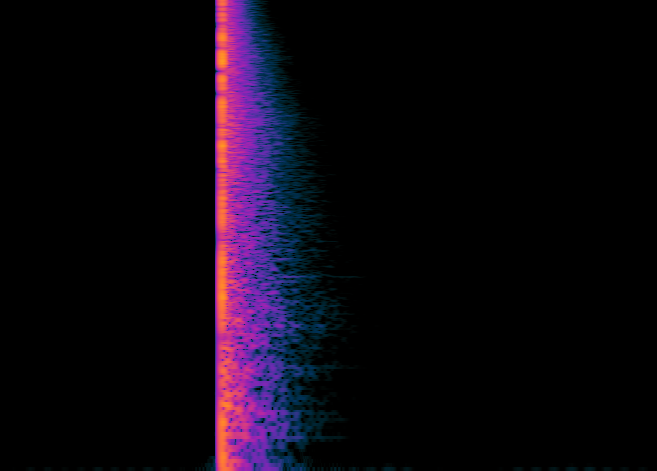

Response to the sine sweep after deconvolution.

Viewed on the spectrogram, this boils down to shearing.

My actual implementation is based on Farina, Angelo. (2000). Simultaneous Measurement of Impulse Response and Distortion With a Swept-Sine Technique. or Wiener Deconvolution depending on the test signal and selected options. The technique by Farina also gets rid of the harmonic distortion.
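For an exponential sweep the core of the swept-sine technique fits in a few lines. The sketch below is an illustration under assumptions, not the implementation used in the tool: it builds the inverse filter as the time-reversed sweep with an amplitude envelope that falls by 6 dB per octave, and it leaves out normalization, windowing and the Wiener variant. The function name is made up.

```javascript
// Inverse filter for an exponential sine sweep running from startHz to endHz.
// Convolving the recorded response with this filter yields the impulse response.
function farinaInverseFilter(sweep, startHz, endHz) {
  const length = sweep.length;
  const logRatio = Math.log(endHz / startHz);
  const inverse = new Float32Array(length);
  for (let n = 0; n < length; n++) {
    // Time reversal undoes the sweep's delay per frequency. The decaying
    // envelope compensates for the sweep spending more time (and energy)
    // on low frequencies than on high ones.
    inverse[n] = sweep[length - 1 - n] * Math.exp((-n / length) * logRatio);
  }
  return inverse;
}
```

Convolving the sweep itself with its inverse filter gives a single sharp peak near sample `sweep.length - 1`. In a real measurement the harmonic distortion products end up before that peak, which is why they can be cut away.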

If this explanation was a bit too handwavy, I suggest reading the paper by Angelo Farina. He strikes a good balance between detail and accessibility.

Web Implementation

After having played around a bit with my CLI implementation I realized that there aren’t (m)any easy to use online tools for creating impulse responses. So I went the extra mile and added a little web UI in the hope that this, admittedly very niche, tool might be useful to others.

All the DSP code for this project is implemented in Rust and compiled to WebAssembly. The UI is written in TypeScript and uses React. Everything is bundled up using Vite and wasm-pack.

I’ve also written some integration tests using Playwright. Using Playwright was rather nice and low friction so I will likely use it again in future projects.

Limitations

While there is a UI and a bit of plumbing for normalizing and trimming the generated impulse responses the core of the code is still what I’ve written for my experiments.

There are still many rough edges and potential for improvement. One obvious area is improved noise reduction, especially with regard to impulse noise, which leads to chirps leaking into the deconvolved impulse response.

Demo

Finally a little demo of what this can sound like. At first the arpeggio is played back without added reverb, then convolved with a measurement of my room and finally convolved with the impulse folded back onto itself with some feedback for an ambient effect.

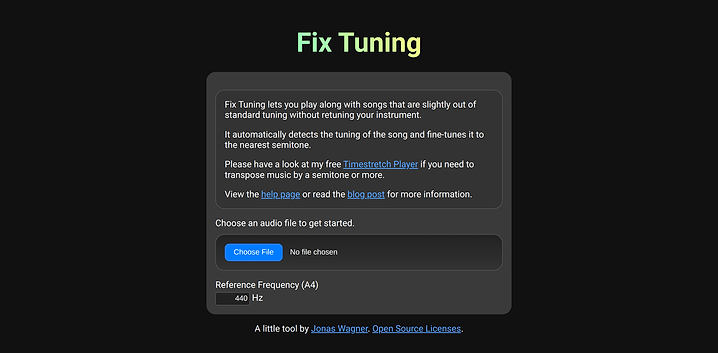

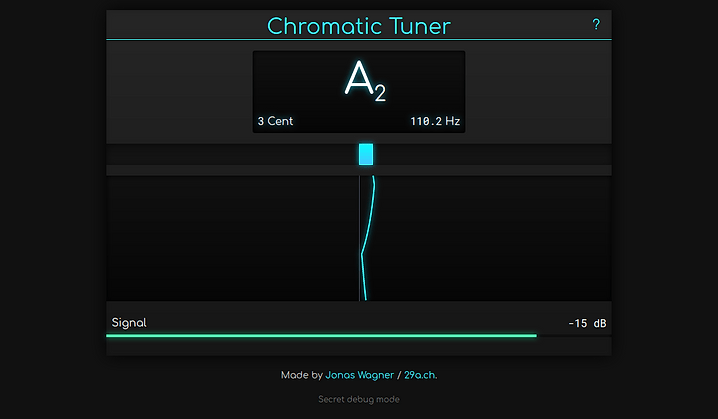

Tuning Songs Instead of My Guitar 17 Nov 2024 10:25 AM (11 months ago)

In 1834 Johann Heinrich Scheibler recommended using 440 Hz as the standard frequency for A4. For various reasons a lot of music deviates from that. Deviating from 440 Hz has its artistic merits and can give music a different feeling and timbre.

It’s an annoyance if you have to retune your instrument slightly for every song you want to play along with.

It is possible to shift the pitch of a piece of music instead, and I have written a pitch shifter called Timestretch Player to do just that. But finding the exact pitch offset is still tedious.

So, being fed up with retuning my guitar to play along with some song supposedly tuned to match the pitch of an anvil being struck with a hammer in the intro, I decided to build myself a little tool to automate the process.

Fix Tuning automatically detects the tuning offset from a reference pitch (usually 440 Hz) in cents (¹⁄₁₀₀ of a semitone) and corrects it by slightly changing the playback speed.

Implementation

The pitch offset detection works by detecting the fundamental frequency of harmonic signals in the audio file. It then calculates their delta from the closest note in the 12 tone equal temperament scale. Finally it calculates the pitch offset that will minimize the deltas.

The pitch-detection approach is loosely inspired by Noll, A. M. (1970). Pitch determination of human speech by the harmonic product spectrum, the harmonic sum spectrum and a maximum likelihood estimate.

Because the changes in pitch required to correct the offset are fairly small, I’ve opted to correct them by changing the playback speed rather than using a more advanced pitch-shifting method. In my opinion, the 3% difference in speed required to correct an offset of half a semitone is less noticeable than artifacts introduced by changing the pitch independently of the tempo. For high-quality resampling I’m using the rubato crate.
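The arithmetic behind these steps can be sketched in a few lines. This is an illustration under assumptions, not the code from Fix Tuning: it takes a list of already detected fundamental frequencies as input, and it uses a circular mean to combine the per-note deltas, which is just one plausible way to minimize them. All function names are made up.

```javascript
// Deviation of a frequency from the closest 12 tone equal temperament note, in cents.
function centsFromNearestNote(frequency, reference = 440) {
  const cents = 1200 * Math.log2(frequency / reference);
  return cents - 100 * Math.round(cents / 100);
}

// Combine the deviations into one offset. They wrap around every 100 cents,
// so a circular mean is used instead of a plain average.
function estimateOffsetCents(frequencies, reference = 440) {
  let sumSin = 0;
  let sumCos = 0;
  for (const frequency of frequencies) {
    const angle = (centsFromNearestNote(frequency, reference) / 100) * 2 * Math.PI;
    sumSin += Math.sin(angle);
    sumCos += Math.cos(angle);
  }
  return (Math.atan2(sumSin, sumCos) / (2 * Math.PI)) * 100;
}

// Playback speed that cancels the offset by resampling.
function playbackRateForOffset(offsetCents) {
  return Math.pow(2, -offsetCents / 1200);
}

// Half a semitone flat needs a speed-up of roughly 3%.
console.log(playbackRateForOffset(-50).toFixed(3)); // "1.029"
```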

The DSP code is written in Rust and compiled to WebAssembly, while the web UI is built with plain TypeScript wired up using Vite and wasm-pack.

Limitations

The pitch detection assumes a 12 tone equal temperament scale and doesn’t account for key or tonal center. It simply tunes the audio to align most closely with a 12 tone scale based on the reference frequency.

While the pitch detection is fairly robust due to averaging over the entire song, it will still struggle with music that is mostly atonal.

Most testing has been done with a small number of songs and short synthetic snippets. For the songs I’ve established the ground truth by ear. Hopefully good enough for quick validation, not good enough for fine tuning.

Lastly the web audio API used to decode the files resamples the decoded audio to the sample rate it is internally using. This isn’t ideal but I think it’s negligible in practice.

Why no AI?

Machine learning or “ai” would certainly lend itself to this problem. Already back in 2018 CREPE has shown that convolutional neural networks are well suited for pitch estimation. End-to-End Musical Key Estimation Using a Convolutional Neural Network shows that estimating the tonal center is possible as well. Estimating the tonal center would be fairly difficult with my current approach as it involves a lot of cultural and musical conventions that are difficult to capture in a simple algorithm.

However building up a suitable dataset for this use case, training and testing different architectures is quite a bit of work. Running inference in a web browser also tends to be non-trivial.

So I think given the current scope of the project going with a simple hand coded algorithm was the right call.

Next Steps

It could be interesting to integrate the pitch offset detection into my Timestretch Player. I’m also working on another guitar tuner, where “Tune to Song” could be a nice feature.

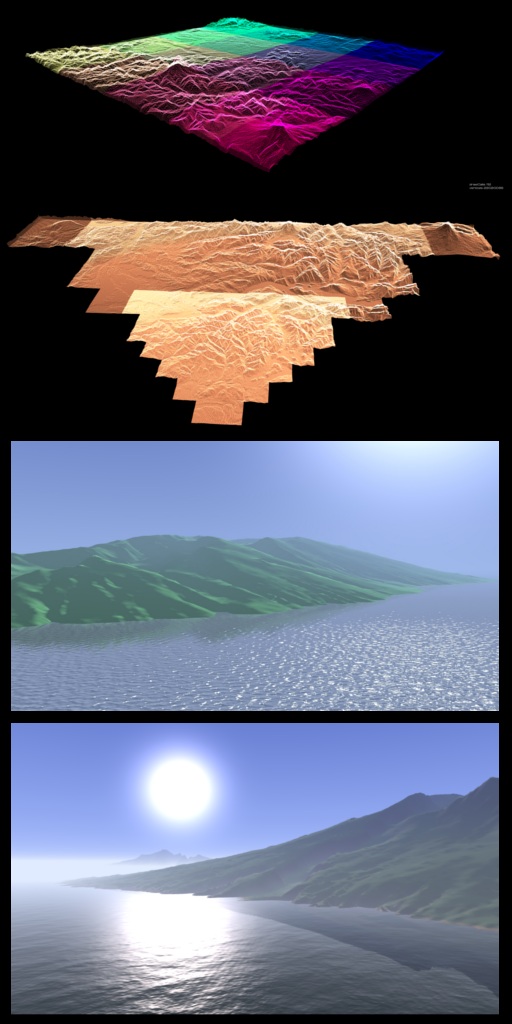

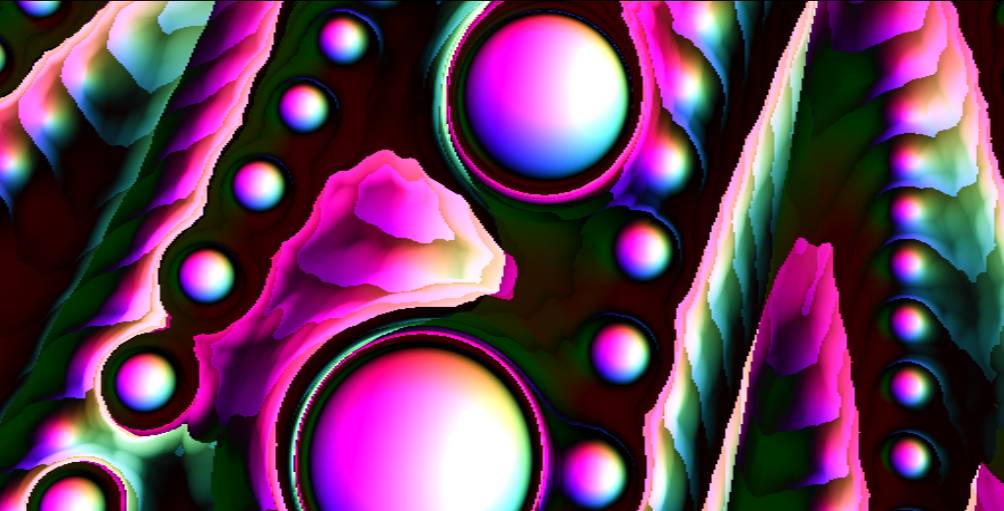

simplex-noise.js 4.0 & Synthwave Demo 23 Jul 2022 3:07 AM (3 years ago)

I’ve just released version 4.0 of simplex-noise.js. Based on user feedback the new version supports tree shaking and cleans up the API a bit. As a nice little bonus, it’s also about 20% - 30% faster.

It also removes the bundled PRNG to focus the library down to one thing - providing smooth noise in multiple dimensions.

The API Change

The following bit of code should illustrate the changes to the API well:

    // 3.x forces you to import everything at once
    import SimplexNoise from 'simplex-noise';
    const simplex = new SimplexNoise();
    const value2d = simplex.noise2D(x, y);

    // 4.x allows you to import just the functions you need
    import { createNoise2D } from 'simplex-noise';
    const noise2D = createNoise2D();
    const value2d = noise2D(x, y);

Tree shaking

The new API enables JavaScript bundlers to perform tree shaking. Essentially dead code removal based on imports and exports.

Tree shaking reduces the size of bundled javascript by leaving out code that isn’t used. As author of a library it enables me to worry less about the bundle size impact of features that might not be used by the majority of users.

A little demo to celebrate

The release of 4.0 and getting over 1’000 stars on github was a good excuse to write a little demo to celebrate. For some extra fun I’ve decided to constrain myself to code a bit like I did in 2010 again. Canvas 2d only. No WebGL. :)

The performance implications of that decision are pretty terrible but it’s not like the world needs a demo to prove that quads can be rendered more quickly anyways. ;)

The demo uses 2 octaves of 2d noise from simplex-noise.js in a FBM configuration. The noise is then modulated with a few sine waves to create a twisting road, mountains and more flat plains.
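FBM (fractional Brownian motion) simply sums several octaves of noise, each with a higher frequency and lower amplitude than the previous one. A minimal sketch, not the demo's actual code: the function name and the `lacunarity` and `gain` defaults are assumptions, and the noise function is passed in so that the snippet runs without the library.

```javascript
// Sum `octaves` layers of 2D noise; each layer doubles the frequency
// and halves the amplitude (for the default lacunarity and gain).
function fbm2D(noise2D, x, y, octaves = 2, lacunarity = 2, gain = 0.5) {
  let sum = 0;
  let amplitudeSum = 0;
  let amplitude = 1;
  let frequency = 1;
  for (let octave = 0; octave < octaves; octave++) {
    sum += amplitude * noise2D(x * frequency, y * frequency);
    amplitudeSum += amplitude;
    amplitude *= gain;
    frequency *= lacunarity;
  }
  // Normalize so the result stays within the range of a single noise call.
  return sum / amplitudeSum;
}
```

With simplex-noise.js 4.x the function returned by `createNoise2D()` can be passed in as `noise2D`.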

At the highest resolutions the demo is pushing 4096 quads/frame! A bit more than the SuperFX Chip on SNES could handle. ;)

If you are interested in more details the code is available on github.

The future

With tree shaking reducing the impact of rarely used code it could be fun to add a few more features to simplex-noise.js in the future. A few ideas that come to mind are:

- Noise with a controllable period (aka tileable noise)

- Noise in 1D

- More random noise using a (better) hash function like xxhash

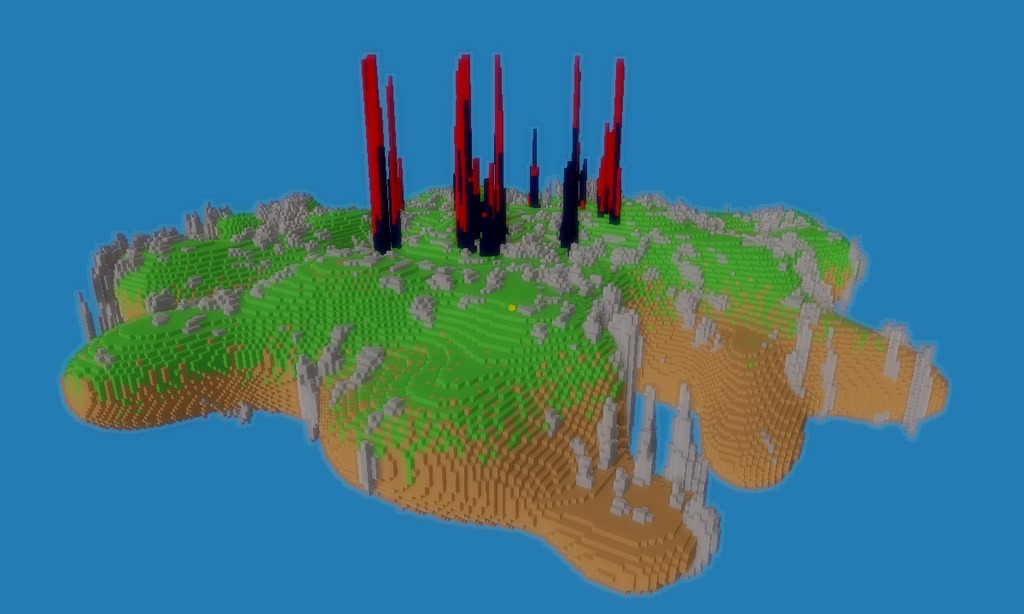

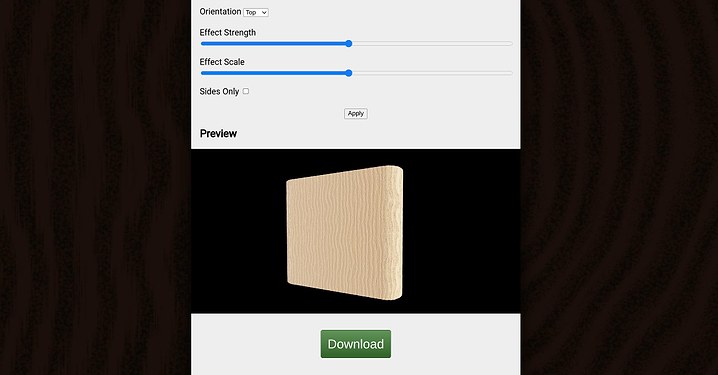

Tool to apply wood textures to 3d prints 23 Oct 2021 10:57 AM (3 years ago)

A little while ago I came across an interesting post on reddit on how a wood displacement map can enhance the look of 3D prints.

Just a bit before I also played with the fuzzy skin feature in prusa slicer 2.4. That made me wonder whether the wood effect could be achieved directly in the slicer by tweaking the fuzzy skin feature. I had a quick look at the prusa slicer source and it looked like it would be fairly easy to add.

To prototype the idea and gauge whether there is any interest in it I decided that it would be best to create a little web app using three.js.

For the effect to just work I had to avoid uv maps. Instead I’m using a basic volumetric (3d) procedural wood texture derived from sine waves and a bit of noise.
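To give an idea of what such a volumetric texture can look like, here is a minimal sketch. It is not the texture used in the tool: the ring model, the parameter names and the defaults are assumptions. Growth rings are concentric cylinders around the z axis, turned into a wave with a sine and perturbed by a noise function that the caller supplies.

```javascript
// Returns a value between 0 and 1 for any point in space, no uv map required.
// noise3D is any function (x, y, z) => value in [-1, 1]; it defaults to no noise.
function woodTexture(x, y, z, noise3D = () => 0, ringsPerUnit = 1, wobble = 0.5) {
  const distanceFromAxis = Math.hypot(x, y);
  const perturbation = wobble * noise3D(x, y, z);
  return 0.5 + 0.5 * Math.sin(2 * Math.PI * (distanceFromAxis * ringsPerUnit + perturbation));
}
```

Since the function is defined for every point, each vertex of the mesh can be displaced along its normal by the returned value.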

Preliminary results

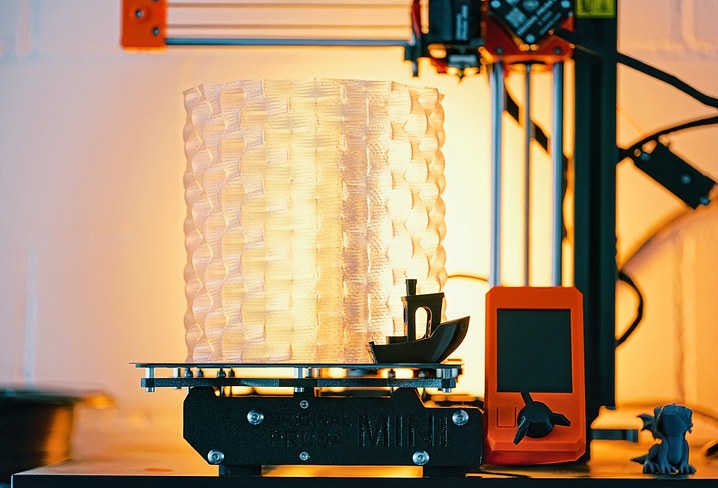

To test the process I designed and printed a very simple phone stand and a 3D benchy using Polymaker PolyWood filament.

Benchy and phone stand on the prusa mini.

The phone stand lit by the sun.

Known issues

When processing STL files I first tessellate the geometry and then perform the displacement mapping. Luckily there is a simple tessellator built into three.js which I could use. Unfortunately it creates T-junctions (when running out of iterations). The displacement mapping can also lead to self intersections.

I also included a process for directly modifying G-code to test how this process could work when integrated into the slicer. When processing G-code I simply look for the perimeter comments inserted by prusa slicer and modify the G1 movement commands. Moves are currently not subdivided.

So the tool is far from perfect but I’m quite happy with the results I got from it so far.

Possible future work

If there is enough interest in texturing features like this I think it would be pretty cool to integrate them directly into the slicer.

Obviously the wood effect isn’t the only pattern that could be achieved with this approach. Playing with different textures could definitely be fun as well.

Swirly Bokeh Lens Hood - 3D Printed 19 Sep 2021 4:42 AM (4 years ago)

Photo taken with the 3d printed swirly lens hood.

I enjoy the swirly blur in the out of focus regions that certain lenses like the historic Petzval produce. This effect is also known as swirly bokeh.

Just not quite enough to own such a lens myself (yet). Instead I’ve chosen to emulate it by 3d printing a special lens hood for my Sony FE 55/1.8 lens.

How to get swirly bokeh with a lens hood

A similar effect to the one produced by these old lenses can be achieved using a lens hood that restricts the paths light can take into the lens. The swirly effect is essentially created by the opening of the lens hood being too small. This creates mechanical vignetting (also known as cat’s eye bokeh), blocking part of the light from hitting the sensor.

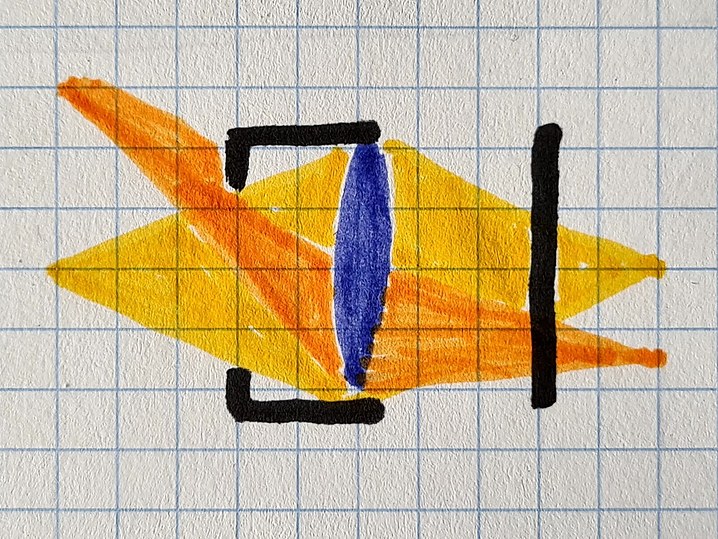

The light coming from the left (yellow, orange) is partially blocked by the lens hood (black) before hitting the lens (blue) and finally the sensor on the right (black).

In most lenses that produce this effect the vignetting happens somewhere inside the lens but the effect it has is similar.

3D printing a swirly bokeh lens hood

To test out how well this works in practice I modelled such a restrictive lens hood in freecad.

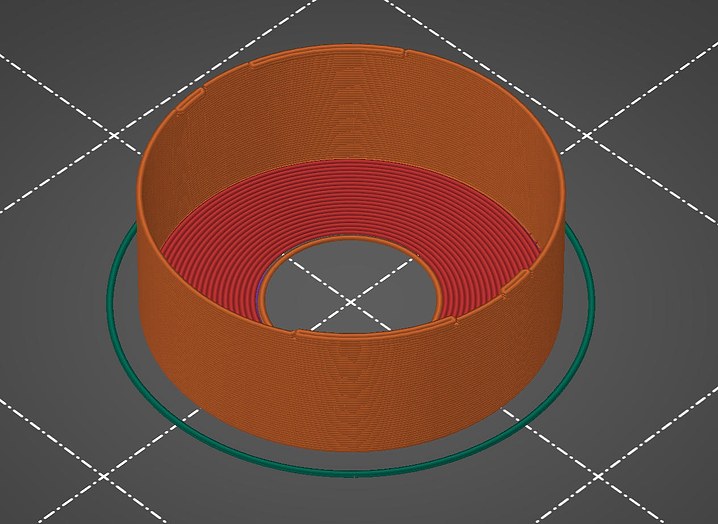

Preview of the 3D print.

Finished product.

Definitely not the cleanest print but at a material cost of about 20 cents (EU/US) and a print time of about 15 minutes it’s well worth it.

Results

Crop of the top right corner of some city lights at night.

Want to make your own?

I’ve thrown the source files up in a GitHub repo for you to use: jwagner/swirly-lens-hoods. With that said, I’m a very inexperienced CAD user and in this case didn’t even try to create something clean. If you can, you might be better off just remodelling it to fit your own lens rather than trying to adapt it.

Procedural Lamp Shades for 3D Printing 22 Aug 2021 12:53 AM (4 years ago)

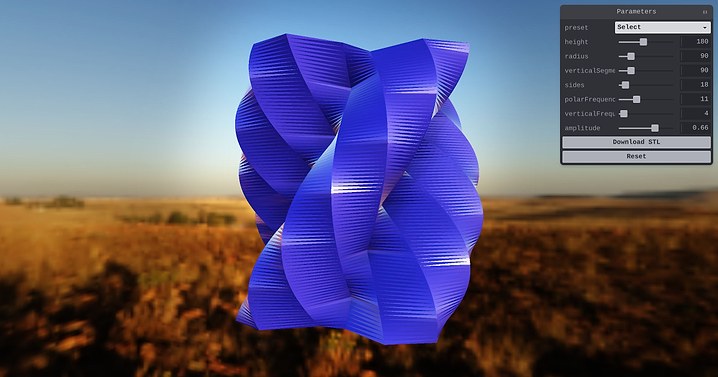

Play with generating lamp shades

I didn’t have any lamp shades in my apartment since moving out from my parents’ place. I’ve decided to change that and have some fun while doing it by building a generator for lamp shades.

How it works

In order to test my setup with three.js.

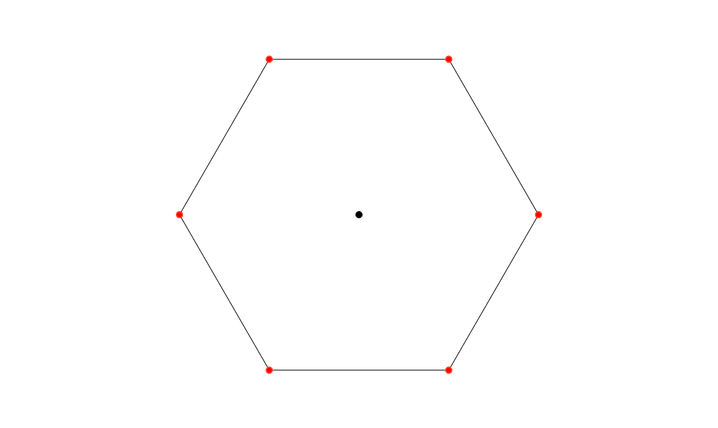

I’ve started by generating n-gons. A hexagon in this example.

In order to make the result a bit more visually appealing I then modulated the distance from the center (radius) of each vertex using a sine wave. This results in some interesting shapes due to aliasing.

To move into the third dimension I extrude the shape and shift the phase of the sine wave a bit in order to twist the resulting shape.
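The three steps above can be sketched as one small vertex generator. This is an illustration, not the generator's code: the function name, the option names and the defaults are assumptions, and building faces from the vertices is left out.

```javascript
// Generates the vertices of a twisted, sine modulated n-gon extrusion.
// Returns [x, y, z] triples, `sides` vertices for each of the `layers + 1` rings.
function lampShadeVertices({
  sides = 6,
  layers = 4,
  baseRadius = 50,
  amplitude = 5,
  waves = 3,
  height = 100,
  twist = Math.PI / 2,
} = {}) {
  const vertices = [];
  for (let layer = 0; layer <= layers; layer++) {
    const progress = layers > 0 ? layer / layers : 0;
    // Shifting the phase a little on every layer twists the shape.
    const phase = progress * twist;
    for (let i = 0; i < sides; i++) {
      const angle = (i / sides) * 2 * Math.PI;
      // Modulate the distance from the center with a sine wave.
      const radius = baseRadius + amplitude * Math.sin(waves * angle + phase);
      vertices.push([radius * Math.cos(angle), radius * Math.sin(angle), progress * height]);
    }
  }
  return vertices;
}
```

The aliasing mentioned above shows up when `waves` is large compared to `sides`: the sine wave is then sampled at only a few angles per period.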

3D Printed results

Here is what one of the models looks like in the real world. 3D printed in vase mode (as a single spiral of extruded plastic) out of PETG using a 0.8mm nozzle.

I made myself a guitar tuner 20 Apr 2020 3:58 PM (5 years ago)

I first learned about the fourier transform at about the same time I started to play guitar. So obviously the first idea that came to my mind at that time was to build a tuner to tune my new guitar. While I eventually got it to work the accuracy was terrible so it never ended up seeing the light of the day.

Fast forward a bit over a decade. It’s 2020 and we are fighting a global pandemic using social distancing. I obviously tried to find ways to directly address the issue with code but in the end there is only so much that can be done on that front and a lot of really clever people on it already.

So rather than coming up with another well intended but flawed design of a mechanical ventilator I decided to revisit this old project of mine. :)

So what’s in it?

The tuner has been built with a whole lot of web tech like getUserMedia to access the microphone, WebAudio to get access to the audio data from the microphone as well as web workers to make it a bit faster. Framework wise I used React and TypeScript.

With that out of the way, the rest of the article will focus on the algorithm that makes the whole thing tick.

Disclaimer

In the following sections I will oversimplify a lot of things for the sake of accessibility and brevity.

If you already have a solid understanding of the subject, please excuse my oversimplifications.

If you don’t, keep in mind that there is a lot more to learn and understand than what I will touch on in this description.

If you want to go deeper I highly recommend reading the papers by Philip McLeod et al. mentioned at the end. They formed the basis of this tuner.

Going beyond the fourier transform

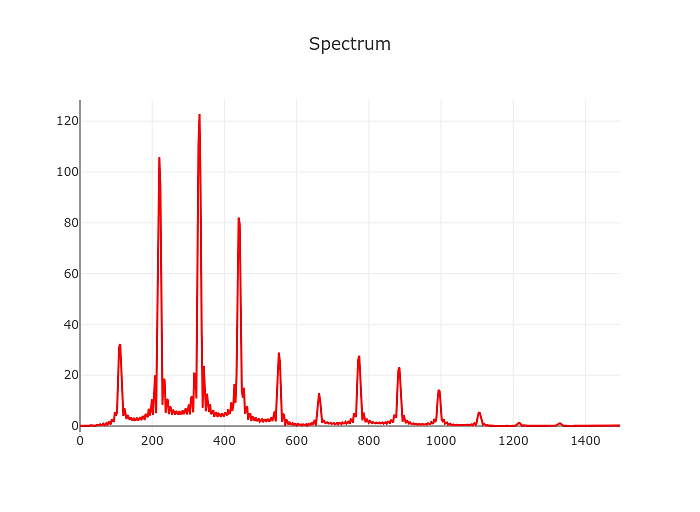

Initially I decided to resume this project from where I stopped years ago, by doing a straight fourier transform on the input and then selecting the first significant peak (by magnitude).

Not quite up to speed with the fourier transform? The video “But what is the Fourier Transform? A visual introduction.” illustrates it beautifully.

The naive spectrum approach of course still works as badly as it did back then. In slightly oversimplified terms the frequency resolution of the discrete short-time fourier transform is sample rate divided by window size.

So taking a realistic sample rate of 48000 Hz and a (comparably large) window size of 8192 samples we arrive at a frequency resolution of about 6 Hz.

The low E of a guitar in standard tuning is at ~82 Hz. Add 6 Hz and you are already past F.

We need at least 10x that to build something resembling a tuner. In practice we should aim for a resolution of approximately 1 cent or about 100x the resolution we’d get from the straight fourier transform approach.
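The numbers above are easy to reproduce. The helper names in this small sketch are made up, and 82.41 Hz is the usual equal temperament value for the low E.

```javascript
// Width of one frequency bin of a discrete fourier transform.
function frequencyResolution(sampleRate, windowSize) {
  return sampleRate / windowSize;
}

// Musical distance between two frequencies in cents (100 cents = 1 semitone).
function centsBetween(fromHz, toHz) {
  return 1200 * Math.log2(toHz / fromHz);
}

const binWidth = frequencyResolution(48000, 8192);
const lowE = 82.41;
console.log(binWidth); // 5.859375
console.log(centsBetween(lowE, lowE + binWidth) > 100); // true, one bin is more than a semitone

// Bin width needed to resolve a single cent at the low E, in Hz.
const oneCentInHz = lowE * (Math.pow(2, 1 / 1200) - 1);
console.log(binWidth / oneCentInHz > 100); // true, hence "about 100x"
```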

There are approaches to improve the accuracy of this approach a bit, in fact we’ll meet one of them a bit later on in a different context. For now let’s focus on something a bit simpler.

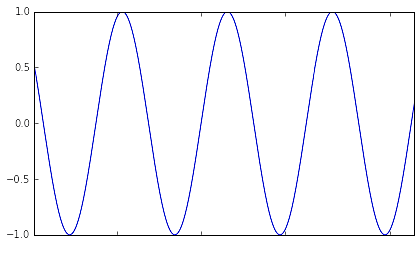

Auto correlation

Compared to the fourier transform autocorrelation is fairly simple to explain. In essence it’s a measure of how similar a signal is to a shifted version of itself. This nicely reflects the frequency, or rather period of the signal we are trying to determine.

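In a bit more concrete terms it’s the sum of the products of the signal and a time shifted version of the signal. In simplistic JavaScript that could look a bit like this:

    function autoCorrelation(signal) {
      // Start every lag at 0; adding to an empty array slot would yield NaN.
      const output = new Array(signal.length).fill(0);
      for (let lag = 0; lag < signal.length; lag++) {
        // Sum the products of the signal and a copy of itself shifted by `lag` samples.
        for (let i = 0; i + lag < signal.length; i++) {
          output[lag] += signal[i] * signal[i + lag];
        }
      }
      return output;
    }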

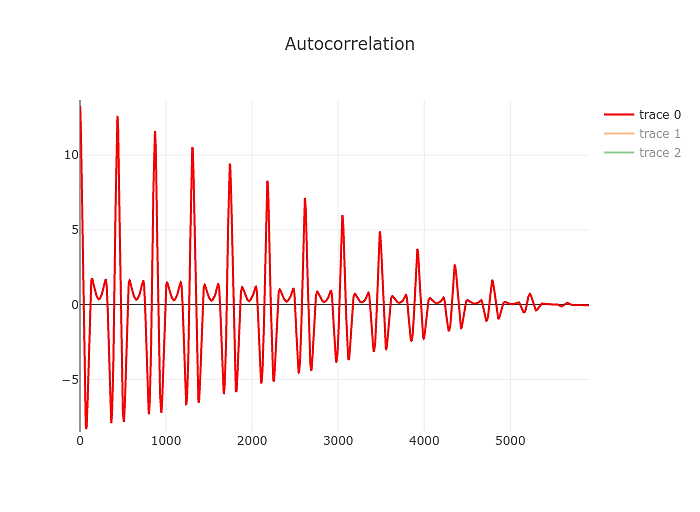

The result will look a bit like this:

Just by eyeballing it you can tell that it’s going to be easier to find the first significant of the autocorrelation compared to the spectrum yielded by the fourier transform above. Our resolution also changed a bit, this time we are measuring the period of the signal. Our resolution is limited by the sample rate of the signal. So to take the example above the period of a 82 Hz signal is 48000/82 or 585 samples. Being off by a sample we’d end up at 82.19 Hz. Not great but at least it’s still an E. At higher frequencies things will start to look different of course but for our purposes that’s a good point to start.

The actual algorithm used in the tuner is based on McLeod, Philip & Wyvill, Geoff. (2005). A smarter way to find pitch. but the straight autocorrelation above is enough to understand what’s going on.

Picking a peak

Now that we have the graph above we’ll need a robust way of determining the first significant peak in it, which hopefully will also be the perceived fundamental frequency of the tone we are analysing.

We’ll do this in two steps. First we will find all the peaks after the initial zero crossing. We can do this by just looping over the signal and keeping track of the highest value we’ve seen and its offset. Once the current value drops below 0 we can add that maximum to the list of peaks and reset it.

From this list we’ll now pick the first peak which is bigger than the highest peak multiplied by some tolerance factor like 0.9.
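The two steps can be sketched as follows. This is an illustration of the description above rather than the tuner's code; the function name is made up, and the choice to ignore a final lobe that never drops below zero again is an assumption.

```javascript
// Returns { lag, value } of the first significant peak, or null if there is none.
function pickFirstSignificantPeak(correlation, tolerance = 0.9) {
  let i = 0;
  // Skip the lobe around lag 0 up to the initial zero crossing.
  while (i < correlation.length && correlation[i] > 0) i++;

  // Step 1: collect the highest value of every positive lobe.
  const peaks = [];
  let bestLag = -1;
  let bestValue = 0;
  for (; i < correlation.length; i++) {
    const value = correlation[i];
    if (value > bestValue) {
      bestValue = value;
      bestLag = i;
    } else if (value < 0 && bestLag !== -1) {
      peaks.push({ lag: bestLag, value: bestValue });
      bestLag = -1;
      bestValue = 0;
    }
  }
  if (peaks.length === 0) return null;

  // Step 2: take the first peak that comes close enough to the highest one.
  const highest = Math.max(...peaks.map((peak) => peak.value));
  return peaks.find((peak) => peak.value >= highest * tolerance);
}
```

The tolerance keeps a slightly higher peak at twice the period from winning over the peak at the actual period, which would make the tuner report a note one octave too low.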

At this point we have a basic tuner. It’s not very robust. It’s not very fast or accurate but it should work.

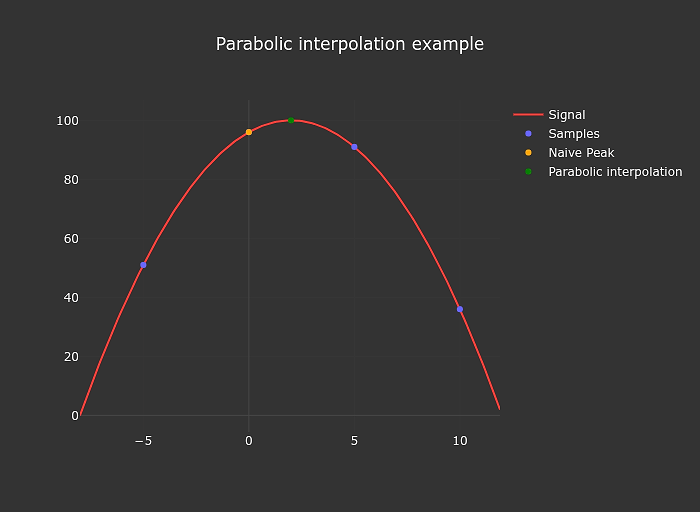

Improving accuracy

The autocorrelation algorithm mentioned above is evaluated at discrete steps matching the samples of the audio input, which limits our accuracy. We can easily improve on this a bit by interpolating. I use parabolic interpolation in my tuner.

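The implementation of this is also extremely simple:

    // a, b, c: the values just before, at and just after the sampled peak.
    // Returns the offset of the interpolated peak from b, in samples.
    function parabolicPeakInterpolation(a, b, c) {
      const denominator = a - 2 * b + c;
      if (denominator === 0) return 0;
      return (a - c) / denominator / 2;
    }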
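To show how the interpolated offset is used, here is a small sketch that refines a peak found at an integer lag and converts it to a frequency. The function name and the argument order are assumptions; the parabola fit is the same as above, inlined so the snippet stands on its own.

```javascript
// Refines the peak at `peakLag` with a parabola through its neighbours
// and returns the corresponding frequency in Hz.
function refinedFrequency(correlation, peakLag, sampleRate) {
  let offset = 0;
  // The fit needs a neighbour on both sides of the peak.
  if (peakLag > 0 && peakLag < correlation.length - 1) {
    const a = correlation[peakLag - 1];
    const b = correlation[peakLag];
    const c = correlation[peakLag + 1];
    const denominator = a - 2 * b + c;
    if (denominator !== 0) offset = (a - c) / denominator / 2;
  }
  // The lag is the period in samples, so the frequency is its reciprocal.
  return sampleRate / (peakLag + offset);
}
```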

Improving reliability

So far everything went smoothly. I had a reasonably accurate tuner for as long as I fed it a clean signal (electric guitar straight into a nice interface).

For some reason I also wanted to get this to work using much more dirty signals from something like a smartphone microphone.

At this stage I spent quite a bit of time implementing and evaluating various noise reduction techniques like simple filters and variations on spectral subtraction. In the end their main benefit was in being able to reduce 50/60 Hz hum but the results were still miserable.

So after banging my head against the wall for a little while I embraced a bit of a paradigm shift and gave up on trying to find a magical filter that would give me a clean signal to feed the pitch detection algorithm.

Onset Locking

I now use the brief moment right after the note has been plucked to get a decent initial guess of the note being played. This is possible because the initial attack of the note is fairly loud, resulting in a decent signal to noise ratio.

I then use this initial guess to limit the window in which I look for the peak in the auto correlation caused by the note and combine the various measurements using a simple kalman filter.
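For readers who haven't met Kalman filters yet, this is roughly what a very simple one looks like for a single, slowly changing value such as a pitch estimate. It is a generic sketch, not the filter from the tuner; the class name, the parameters and the values in the example are assumptions.

```javascript
// Scalar Kalman filter for a value that is assumed to stay roughly constant.
class ScalarKalmanFilter {
  constructor(initialEstimate, initialVariance, processVariance, measurementVariance) {
    this.estimate = initialEstimate;
    this.variance = initialVariance;
    this.processVariance = processVariance; // how much the true value may drift per step
    this.measurementVariance = measurementVariance; // how noisy a single measurement is
  }

  update(measurement) {
    // Predict: the value stays the same but we become a little less certain.
    this.variance += this.processVariance;
    // Correct: move towards the measurement, weighted by the relative certainty.
    const gain = this.variance / (this.variance + this.measurementVariance);
    this.estimate += gain * (measurement - this.estimate);
    this.variance *= 1 - gain;
    return this.estimate;
  }
}

// Start from the guess made at the onset, then blend in later measurements.
const pitchFilter = new ScalarKalmanFilter(82.0, 1, 0.0001, 0.5);
for (const measurement of [82.6, 82.3, 82.5, 82.4]) pitchFilter.update(measurement);
```

A noisy measurement can be given less weight by raising the measurement variance, for example depending on how loud the signal still is.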

I named the scheme onset locking in my code, but I’m certain it’s not a new idea.

Making it fast

I hope the O(n²) loop in the auto correlation section made you cringe a bit.

Don’t do it that way. Both basic auto correlation and McLeod’s take on it (after applying a bit of basic algebra) can be accelerated using the fast fourier transform.

Good bye n squared, hello n log n. :)

Even with the relatively slow FFT implementation I’m using the speed up is between 10 and 100x. So the optimization is definitely worth doing in practice as well.
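For the basic autocorrelation the trick is that it equals the inverse fourier transform of the signal's power spectrum, as long as the signal is zero padded first. The sketch below illustrates this with a textbook radix-2 FFT; it is not the tuner's code, the function names are made up, and McLeod's variant needs some additional terms that are not shown.

```javascript
// In-place iterative radix-2 FFT. re.length must be a power of two.
function fft(re, im) {
  const n = re.length;
  // Reorder the input by bit reversed index.
  for (let i = 1, j = 0; i < n; i++) {
    let bit = n >> 1;
    for (; j & bit; bit >>= 1) j ^= bit;
    j ^= bit;
    if (i < j) {
      [re[i], re[j]] = [re[j], re[i]];
      [im[i], im[j]] = [im[j], im[i]];
    }
  }
  // Combine transforms of size 1, 2, 4, ... with butterflies.
  for (let size = 2; size <= n; size <<= 1) {
    const half = size >> 1;
    const step = (-2 * Math.PI) / size;
    for (let start = 0; start < n; start += size) {
      for (let k = 0; k < half; k++) {
        const wRe = Math.cos(step * k);
        const wIm = Math.sin(step * k);
        const a = start + k;
        const b = a + half;
        const tRe = re[b] * wRe - im[b] * wIm;
        const tIm = re[b] * wIm + im[b] * wRe;
        re[b] = re[a] - tRe;
        im[b] = im[a] - tIm;
        re[a] += tRe;
        im[a] += tIm;
      }
    }
  }
}

function autoCorrelationFFT(signal) {
  // Zero pad to at least twice the length so the circular
  // correlation computed by the FFT doesn't wrap around.
  let n = 1;
  while (n < 2 * signal.length) n <<= 1;
  const re = new Float64Array(n);
  const im = new Float64Array(n);
  re.set(signal);
  fft(re, im);
  // Power spectrum: squared magnitude of every bin.
  for (let i = 0; i < n; i++) {
    re[i] = re[i] * re[i] + im[i] * im[i];
    im[i] = 0;
  }
  // The power spectrum is real and symmetric, so a second forward
  // transform divided by n acts as the inverse transform.
  fft(re, im);
  return Array.from(re.subarray(0, signal.length), (value) => value / n);
}
```

Up to rounding errors the result matches the nested loop version, but the work grows with n log n instead of n².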

I’m also using web workers to get the calculations off the main thread and, while at it, to run them in parallel.

The result is that the tuner runs fast enough even on my aging Galaxy S7.

What is left to do

Performance in noisy environments is still bad. Using the microphone of a MacBook the tuner barely works; if the fan spins up a bit too loudly it will fail completely.

I’d definitely like to improve this in the future but I also have the suspicion that it won’t be trivial, at least without making additional assumptions about the instrument being tuned.

Another front would be to add alternative tunings, and maybe even allow custom tunings. That should be relatively easy to do but I don’t currently have any use for it.

Further reading

McLeod, Philip & Wyvill, Geoff. (2005). A smarter way to find pitch.

McLeod, Philip (2008). Fast, Accurate Pitch Detection Tools for Music Analysis.

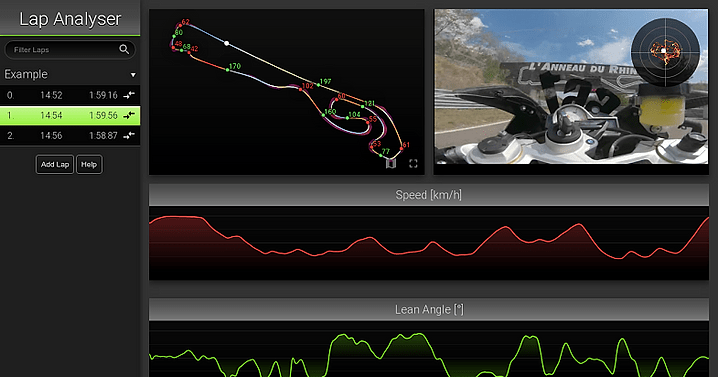

Lap Timer and Analyser for GoPro Videos 31 Dec 2019 8:56 AM (5 years ago)

During the past two years I’ve had the occasional pleasure of riding my motorcycles on race tracks. While I’m still far away from being fast I really enjoy working on my riding.

This led me to considering different data recording and analysis solutions. A bit down the line I realized that the GoPro cameras I already owned actually contain a surprisingly good GPS unit recording data at 18 Hz. To make things even better GoPro also documented their metadata format and even published a parser for it on GitHub.

I was very curious how far I could get with that data and started to play around with it. Many hours and experiments later I somehow ended up with my own analysis software and filters tuned for the camera data.

It loads, processes and displays the data, all in a web browser.

Data Extraction and Filtering

The data extraction is performed using the gopro-telemetry library by Juan Irache.

For filtering the data I wrote a little library for kalman filters in TypeScript. The Kalman Filter book by Roger Labbe helped a lot in learning about the topic. I highly recommend it if you want to dive into the topic yourself.

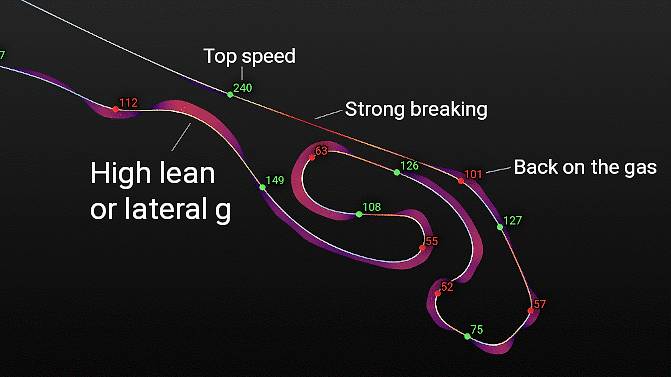

In addition to the obvious line plots of speed, acceleration and lean angles I also implemented a detailed map and what I call a G Map.

Lap Map

The lap map shows the line, speeds, braking points and g forces in a spatial context. I especially like the shaded areas in the corners. They show the lateral (distance from the line) and total (color of the area) forces at play.

Looking at the map is a quick way to gauge how close to the limit one is potentially riding in each of the corners.

G-Map

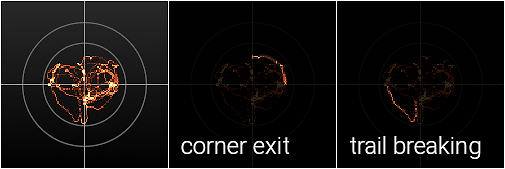

The G-Map is a histogram of the G-Force acting on the vehicle over time. It can be used to gauge how close to the limit a rider is riding.
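A rough sketch of how such a histogram can be computed from speed and heading samples follows. It is an illustration under assumptions, not the analyser's code: the sample format, the function names and the bin layout are made up, no filtering is applied, and whether positive lateral values mean left or right depends on the heading convention.

```javascript
const STANDARD_GRAVITY = 9.80665; // m/s²

// samples: [{ time, speed, heading }] in seconds, m/s and radians (hypothetical format).
function gForces(samples) {
  const forces = [];
  for (let i = 1; i < samples.length; i++) {
    const previous = samples[i - 1];
    const current = samples[i];
    const dt = current.time - previous.time;
    if (dt <= 0) continue;
    // Wrap the change in heading into the range of -π to π.
    let turn = current.heading - previous.heading;
    turn -= 2 * Math.PI * Math.round(turn / (2 * Math.PI));
    const meanSpeed = (current.speed + previous.speed) / 2;
    forces.push({
      // Change of speed along the direction of travel.
      longitudinal: (current.speed - previous.speed) / dt / STANDARD_GRAVITY,
      // Centripetal acceleration: speed times turn rate.
      lateral: (meanSpeed * turn) / dt / STANDARD_GRAVITY,
    });
  }
  return forces;
}

// 2D histogram with `bins` cells per axis, covering -maxG to +maxG.
function gMapHistogram(forces, bins = 31, maxG = 1.5) {
  const histogram = Array.from({ length: bins }, () => new Array(bins).fill(0));
  const toBin = (g) => Math.floor(((g + maxG) / (2 * maxG)) * bins);
  for (const { lateral, longitudinal } of forces) {
    const column = toBin(lateral);
    const row = toBin(longitudinal);
    if (column >= 0 && column < bins && row >= 0 && row < bins) histogram[row][column]++;
  }
  return histogram;
}
```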

Assuming a perfect world where the vehicle has isotropic grip and sufficient power and the rider always operates it at the limit, it would trace out a perfect circle, resembling the Circle of forces.

As you can see my example above is far away from that. It shows conservative riding, always staying within the 1 g that warm track tires can easily handle. It also shows that I still have a lot to learn with regards to consistency.

It also shows that the track in question has more right turns than left.

Future Plans

There is of course a lot more that can be done here.

The GoPro also has an accelerometer and gyro which could be integrated into the filters to yield more accurate results.

The additional data would yield the actual lean angle which could then be used in combination with the lateral acceleration to gauge the effectiveness of the body position/hangoff of the rider.

I also have another version of this running on a Raspberry Pi coupled to an external GPS receiver. This combination results in an all in one integrated data recording solution. One can simply connect to the WiFi hotspot of the little computer onboard the vehicle and view the most recent sessions.

It’s rather nice because it doesn’t require the camera to be running all the time and is quite simple to use. The drawback is that it requires fiddling together a bunch of hardware which I guess most people don’t want to deal with.

Disclaimer

There are two things that need to be said here.

First off this is not a perfect solution and even if it was it couldn’t definitively answer the question of how close to the limit the rider is. Factors like the track surface, weight transfer and other shenanigans are not accounted for.

Secondly, this product and/or service is not affiliated with, endorsed by or in any way associated with GoPro Inc. or its products and services. GoPro, HERO and their respective logos are trademarks or registered trademarks of GoPro, Inc.

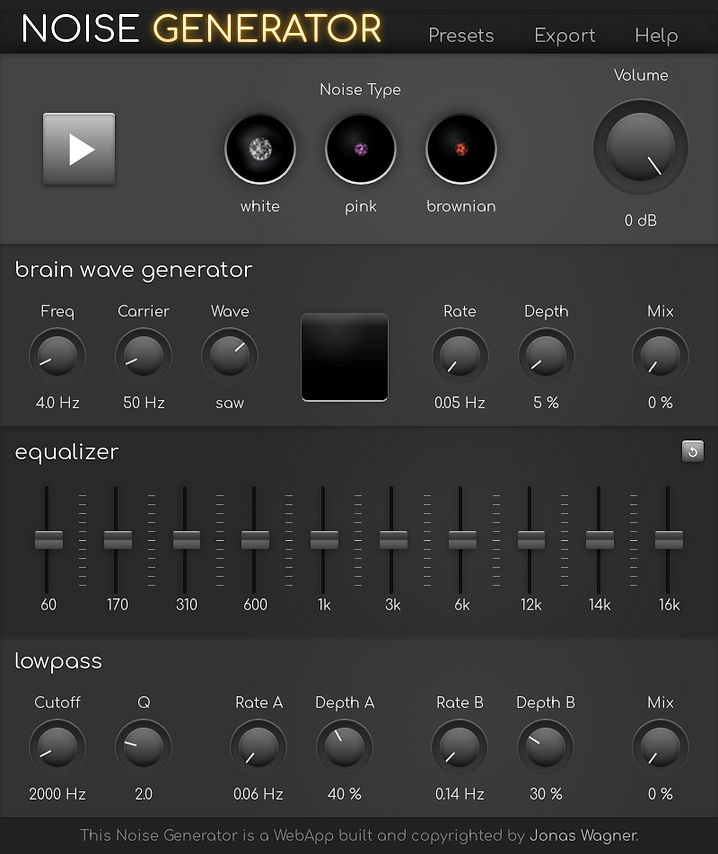

I made myself a noise generator 25 Aug 2019 6:29 AM (6 years ago)

It’s been a while since the last release but I finally finished something again.

Noise tends to eject me from my focus and flow and sometimes noise canceling headphones just aren’t enough to prevent it. In those instances I often mask the remaining noise with less distracting pure noise.

There already are various tools for this purpose, so there isn’t really a strict need for another one. I just wanted to have some fun and build something that does exactly what I want and looks pretty while doing it. As a nice bonus it gave me an opportunity to play with some more recent web technologies.

I don’t expect this to be useful to particularly many people other than myself but that’s why it’s a spare time project. :)

If you want to learn a bit more about it there is a little info on the noise generator help page.

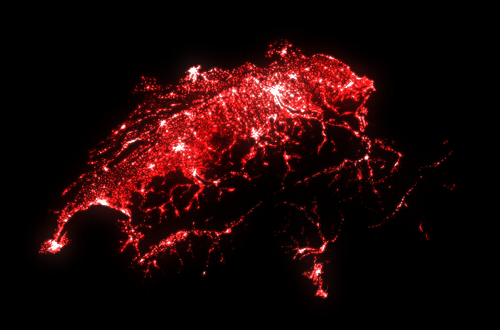

Urban Astrophotography 25 Jun 2017 11:14 AM (8 years ago)

The milkyway core over Zurich.

One of the first lessons in astrophotography is that you better find a dark place, far away from the lights of civilization if you want to take good pictures of the night sky.

Wouldn’t it be beautiful if it was possible to photograph the Milky Way in the middle of a city?

I wanted to try.

Step by Step

I packed my camera onto my bike and rode into the night to take a few photos. This is what they looked like after I developed them using RawTherapee.

Straight out of camera

When you take a picture of the night sky in a city this is about what you will get. At least we can see Saturn and a few stars. Let’s try to peek through the haze.

The first step is to collect more light. The more light we capture with our camera the easier it will be to separate the photons coming from the nebulae in the galactic center from the noise. We can gather more light by capturing more photographs. The only problem is of course that the stars are moving.

The stars are moving

We can fix this problem by aligning the images based on the stars. I used Hugin for this job.

The earth slowly turning

The next step is to combine (stack) all of the images into one. The ground will look blurry because it moves but the stars will remain sharp. I used Siril for this task.

Now this is where the magic happens. We remove the ground and stars from the image and then blur it a lot.

All this image now contains is the light pollution. Let’s subtract it.¹

With all of the light pollution gone darkness remains.

Now we can amplify the faint light in the image, increase contrast and denoise.

Finally we add the recovered light back to one of the original images and apply some final tweaks.

Why this is possible

This is possible for two main reasons. Light pollution is the result of light being scattered (bouncing off particles) in the air. Unlike, for instance, dense smoke, light pollution does not block the light from the glowing gas clouds of the Milky Way. This means that the signal is still there, just very weak compared to the city lights.

The other reason is that the light pollution becomes more and more even, especially higher above the horizon. That’s the property that allows us to separate it from the more focused light of the stars and nebulae using a high pass filter.
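The high pass idea can be sketched in a few lines: estimate the smooth background with a heavy blur and subtract it. This is only an illustration on a row-major grayscale `Float32Array`; the function names and the box blur are assumptions, and it skips the step of removing the ground and stars before blurring. Working in floats keeps the negative values that most graphics software would clip.

```javascript
// Horizontal box blur over a row-major grayscale image (edges use a smaller window).
function boxBlurRows(src, width, height, radius) {
  const out = new Float32Array(src.length);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let sum = 0;
      let count = 0;
      for (let dx = -radius; dx <= radius; dx++) {
        const xx = x + dx;
        if (xx < 0 || xx >= width) continue;
        sum += src[y * width + xx];
        count++;
      }
      out[y * width + x] = sum / count;
    }
  }
  return out;
}

// Swap rows and columns so the row blur can be reused for the vertical pass.
function transpose(src, width, height) {
  const out = new Float32Array(src.length);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) out[x * height + y] = src[y * width + x];
  }
  return out;
}

// Heavily blurred copy of the image: a rough estimate of the even sky glow.
function estimateBackground(img, width, height, radius) {
  const horizontal = boxBlurRows(img, width, height, radius);
  const vertical = boxBlurRows(transpose(horizontal, width, height), height, width, radius);
  return transpose(vertical, height, width);
}

// High pass: what remains after the smooth background is removed.
function subtractBackground(img, width, height, radius) {
  const background = estimateBackground(img, width, height, radius);
  return img.map((value, i) => value - background[i]);
}
```

In practice the radius would be large compared to the stars and small compared to the gradient of the sky glow.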

Settings & Equipment

In case you are curious about the equipment and settings used: Nikon D810, Samyang 24/1.4 @ 2.8, ISO 100, 9 pictures @ 20s, combined using winsorized sigma clipping.

Conclusions

The result is definitely noisy and not of the highest quality, but it still amazes me that this is even possible.

A consumer grade camera and free software can reveal the center of our home galaxy behind the bright haze of city lights, showing us our place in our galaxy and the universe beyond.

I’m curious how much farther I can push this technique with deliberately chosen framing, tweaked settings, more exposures and maybe a Didymium filter.

Further Reading

If you want to learn about astrophotography in general I recommend reading lonelyspeck.com. Ian is a much better writer than I will ever be and he has written a lot of great articles.

1: In practice you want to use grain extract/merge here since subtraction in most graphics software clips negative values to zero.

JPEG Forensics in Forensically 5 Feb 2017 4:53 AM (8 years ago)

In this brave new world of alternative facts the people need the tools to tell true from false.

Well either that or maybe I was just playing with JPEG encoding and some of that crossed over into my little web based photo forensics tool in the form of some new tools. ;)

JPEG Comments

The JPEG file format contains a section for comments marked by 0xFFFE (COM). These exist in addition to the usual Exif, IPTC and XMP data. In some cases they can contain interesting information that is either not available in the other meta data or has been stripped.

For instance, images from Wikipedia contain a link back to the image:

File source: https://commons.wikimedia.org/wiki/File:...

Older versions of Photoshop also seem to leave a JPEG comment:

File written by Adobe Photoshop 4.0

Some versions of libgd (commonly used in PHP web applications) seem to leave comments indicating the version of the library used and the quality the image was saved at:

CREATOR: gd-jpeg v1.0 (using IJG JPEG v62), quality = 90

The JPEG Analysis in Forensically allows you to view these.
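For the curious, reading these comments only requires walking the marker segments at the start of the file. The following is a minimal sketch, not the code used in Forensically; the function name is made up for this example, it expects a `Uint8Array`, and it stops at the first scan (SOS) instead of handling every corner case of the format.

```javascript
// Walk the marker segments of a JPEG and collect the text of all COM (0xFFFE) segments.
function readJpegComments(bytes) {
  if (bytes[0] !== 0xff || bytes[1] !== 0xd8) {
    throw new Error('not a JPEG (missing SOI marker)');
  }
  const comments = [];
  let pos = 2;
  while (pos + 4 <= bytes.length) {
    if (bytes[pos] !== 0xff) break; // lost sync, give up
    const marker = bytes[pos + 1];
    if (marker === 0xff) { pos++; continue; } // fill byte before a marker
    if (marker === 0xda || marker === 0xd9) break; // SOS (image data) or EOI
    // Big-endian segment length; it includes the two length bytes themselves.
    const length = (bytes[pos + 2] << 8) | bytes[pos + 3];
    if (length < 2) break; // corrupt segment
    if (marker === 0xfe) {
      const payload = bytes.subarray(pos + 4, pos + 2 + length);
      comments.push(String.fromCharCode(...payload)); // interprets the bytes as Latin-1
    }
    pos += 2 + length;
  }
  return comments;
}
```

The same walk also yields the order of the markers in the file, if you record `marker` for every segment.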

Quantization Tables

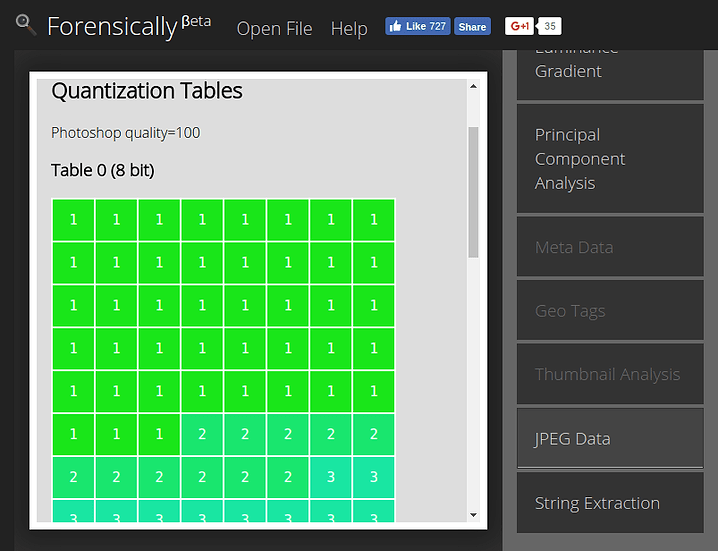

This is probably the most interesting bit of information revealed by this new tool in Forensically.

A basic understanding of how JPEG works can help in understanding this tool so I will try to give you some intuition using the noble art of hand waving.

If you already understand JPEG you should probably skip over this gross oversimplification.

JPEG is in general a lossy image compression format. It achieves good compression rates by discarding some of the information contained in the original image.

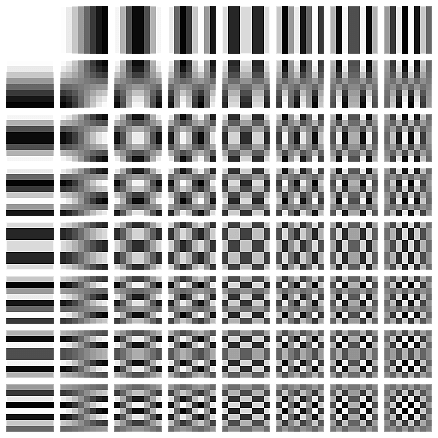

For this compression the image is divided into 8x8 pixel blocks. Rather than storing the individual pixel values for each of the 64 pixels in the block directly, JPEG saves how much the block is like each of 64 fixed “patterns” (coefficients). If these patterns are chosen in the right way this transform is still essentially lossless (except for rounding errors), meaning you can get back the original image by combining these patterns.

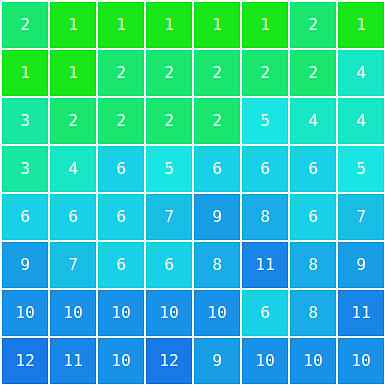

JPEG Patterns

JPEG DCT Coefficients by Devcore (Public Domain)

Now that the image is expressed in terms of these patterns JPEG can selectively discard some of the detail in the image.

How much information about which pattern is discarded is defined in a set of tables that is stored inside of each JPEG image. These tables are called quantization tables.

Example quantization table for quality 95

There are some suggestions in the JPEG standard on how to calculate these tables for a given quality value (1-99). As it turns out not everyone is using these same tables and quality values.

This is good for us as it means that by looking at the quantization tables used in a JPEG image we can learn something about the device that created the JPEG image.

Identifying manipulated images using JPEG quantization tables

Most computer software and internet services use the standard quantization tables. The very notable exception to this rule are Adobe products, namely Photoshop. This means that we can detect images that have been last saved using Photoshop just by looking at their quantization tables.

Many digital camera manufacturers also have their own secret sauce for creating quantization tables. This means that by comparing the quantization tables between different images taken with the same type of camera and setting we can identify whether an image was potentially created by that camera or not.

Automatic identification of quantization tables

Forensically currently automatically identifies quantization tables that have been created according to the standard. In that case it will display Standard JPEG Table Quality=95.

It does also automatically recognize some of the quantization tables used by Photoshop.

In this case it will display Photoshop quality=85.

I’m missing a complete set of sample images for older Photoshop versions using the 0-12 quality scale. If you happen to have one and would be willing to share it please let me know.

If the quantization table is not recognized it will output Non Standard JPEG Table, closest quality=82 or Unknown Table.
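As an illustration of how such a lookup can work, here is a sketch that derives the "standard" luminance table for a quality value the way the IJG libjpeg library does (scaling the example table from Annex K of the JPEG standard, quality 1-100) and then searches for the closest quality. The function names are made up for this example and this is not necessarily how Forensically implements it. The table is written in natural row order; inside a JPEG file the values are stored in zigzag order.

```javascript
// Example luminance quantization table from Annex K of the JPEG standard (row order).
const BASE_LUMINANCE = [
  16, 11, 10, 16, 24, 40, 51, 61,
  12, 12, 14, 19, 26, 58, 60, 55,
  14, 13, 16, 24, 40, 57, 69, 56,
  14, 17, 22, 29, 51, 87, 80, 62,
  18, 22, 37, 56, 68, 109, 103, 77,
  24, 35, 55, 64, 81, 104, 113, 92,
  49, 64, 78, 87, 103, 121, 120, 101,
  72, 92, 95, 98, 112, 100, 103, 99,
];

// Scale the base table the way libjpeg does for a quality between 1 and 100.
function standardLuminanceTable(quality) {
  const q = Math.min(100, Math.max(1, quality));
  const scale = q < 50 ? Math.floor(5000 / q) : 200 - q * 2;
  return BASE_LUMINANCE.map((base) => {
    const value = Math.floor((base * scale + 50) / 100);
    return Math.min(255, Math.max(1, value)); // baseline JPEG allows 1..255
  });
}

// Find the quality whose standard table is closest to the given table.
function closestQuality(table) {
  let best = { quality: 0, distance: Infinity };
  for (let quality = 1; quality <= 100; quality++) {
    const candidate = standardLuminanceTable(quality);
    let distance = 0;
    for (let i = 0; i < 64; i++) distance += Math.abs(candidate[i] - table[i]);
    if (distance < best.distance) best = { quality, distance };
  }
  return best; // distance 0 means the table is exactly a standard table
}
```

A distance of zero corresponds to the "Standard JPEG Table" case, anything else to "closest quality".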

Summary

JPEG images contain tables that specify how the image was compressed. Different software and devices use different quantization tables, so by looking at them we can learn something about the device or software that saved the image.

Additional Resources

- Presentation Using JPEG Quantization Tables to Identify Imagery Processed by Software by Jesse Kornblum

- Paper Using JPEG Quantization Tables to Identify Imagery Processed by Software by Jesse Kornblum

- Digital Image Ballistics from JPEG Quantization by Hany Farid

Structural Analysis

In addition to the quantization tables, the order of the different sections (markers) of a JPEG image also reveals details about its creation. In short, images that were created in the same way should in general have the same structure. If they don’t, it’s an indication that the image may have been tampered with.

String Extraction

Sometimes images contain (meta) data in odd places. A simple way to find these is to scan the image for sequences of sensible characters. A traditional tool to do this is the strings program in Unix-like operating systems.

For example I’ve found images that have been edited with Lightroom that contained a complete xml description of all the edits done to the image hidden in the XMP metadata.
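The core of such a scan is tiny. Here is a minimal sketch in the spirit of the strings program; the function name and the default minimum length of 4 are choices made for this example, and only printable ASCII is considered.

```javascript
// Collect runs of printable ASCII characters that are at least minLength long.
function extractStrings(bytes, minLength = 4) {
  const found = [];
  let current = '';
  for (const byte of bytes) {
    if (byte >= 0x20 && byte <= 0x7e) {
      current += String.fromCharCode(byte); // still inside a printable run
    } else {
      if (current.length >= minLength) found.push(current);
      current = '';
    }
  }
  if (current.length >= minLength) found.push(current); // run ending at end of file
  return found;
}
```

It accepts anything that iterates over byte values, for example a `Uint8Array` created from a file.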

Facebook Meta Data

When using this tool on an image downloaded from Facebook one will often find a string like

FBMD01000a9...

From what I can tell this string is present in images that are uploaded via the web interface. A quick google does not reveal much about its contents. But its presence is a good indicator that an image came from Facebook.

I might add a ‘facebook detector’ that looks for the presence & structure of these fields in the future.

Poke around using these new tools and see what you can find! :)

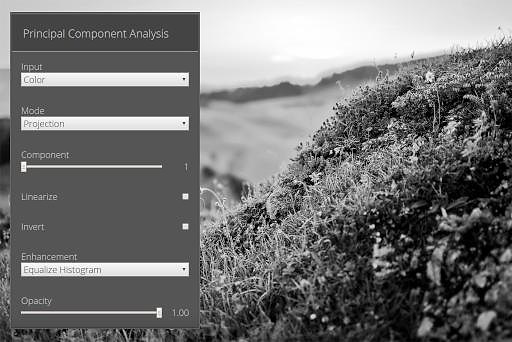

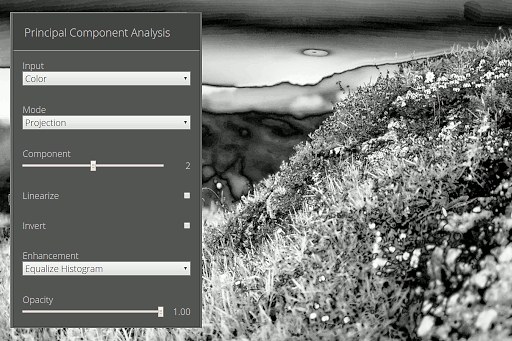

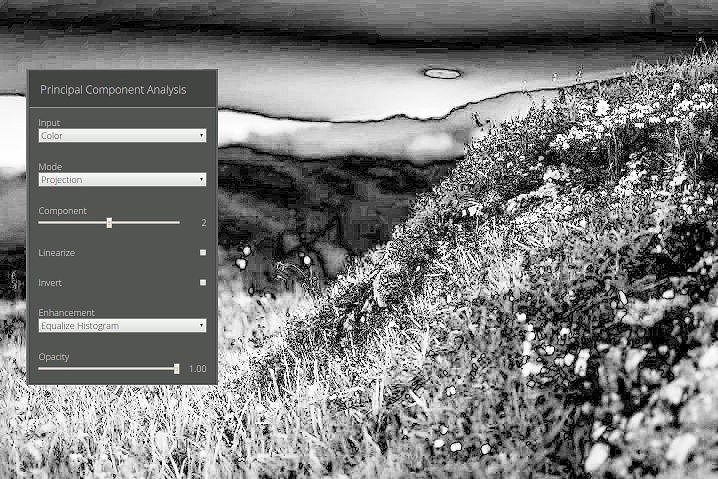

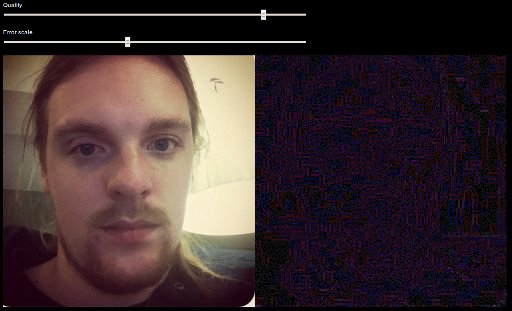

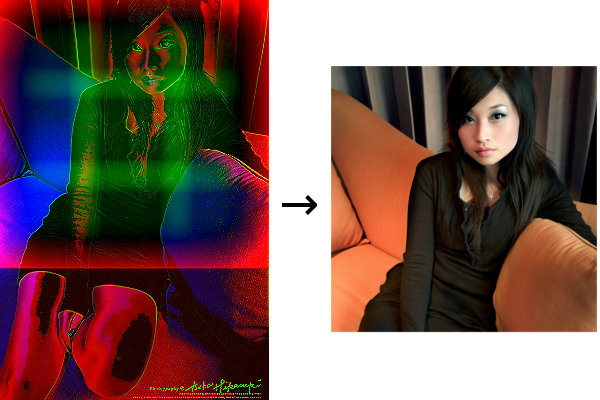

Principal Component Analysis for Photo Forensics 11 Aug 2016 3:43 AM (9 years ago)

As mentioned earlier I have been playing around with Principal Component Analysis (PCA) for photo forensics. The results of this have now landed in my Photo Forensics Tool.

In essence PCA offers a different perspective on the data which allows us to find outliers more easily. For instance colors that just don’t quite fit into the image will often be more apparent when looking at the principal components of an image. Compression artifacts do also tend to be far more visible, especially in the second and third principal components. Now before you fall asleep, let me give you an example.

Example

This is a photo that I recently took:

To the naked eye this photo does not show any clear signs of manipulation. Let’s see what we can find by looking at the principal components.

First Principal Component

Still nothing suspicious, let’s check the second one:

Second Principal Component

And indeed this is where I have removed an insect flying in front of the lens using the inpainting algorithm (content aware fill in Photoshop speak) provided by G’MIC. If you are interested, Pat David has a nice tutorial on how to use this in GIMP.

Resistance to Compression

This technique does still work with more heavily compressed images. To illustrate this I have run the same analysis I did above on the smaller & more compressed version of the photo used in this article rather than the original. As you can clearly see the anomaly caused by the manipulation is still present and quite clear but not as clear as when analyzing a less compressed version of the image. You can also see that the PCA is quite good at revealing the artifacts caused by (re)compression.

Further Reading

If you found this interesting you should consider reading my article Black and White Conversion using PCA which introduces a tool which applies the very same techniques to create beautiful black and white conversions of photographs.

If you want another image to play with, try the one in this post by Neal Krawetz. It can be quite revealing. :)

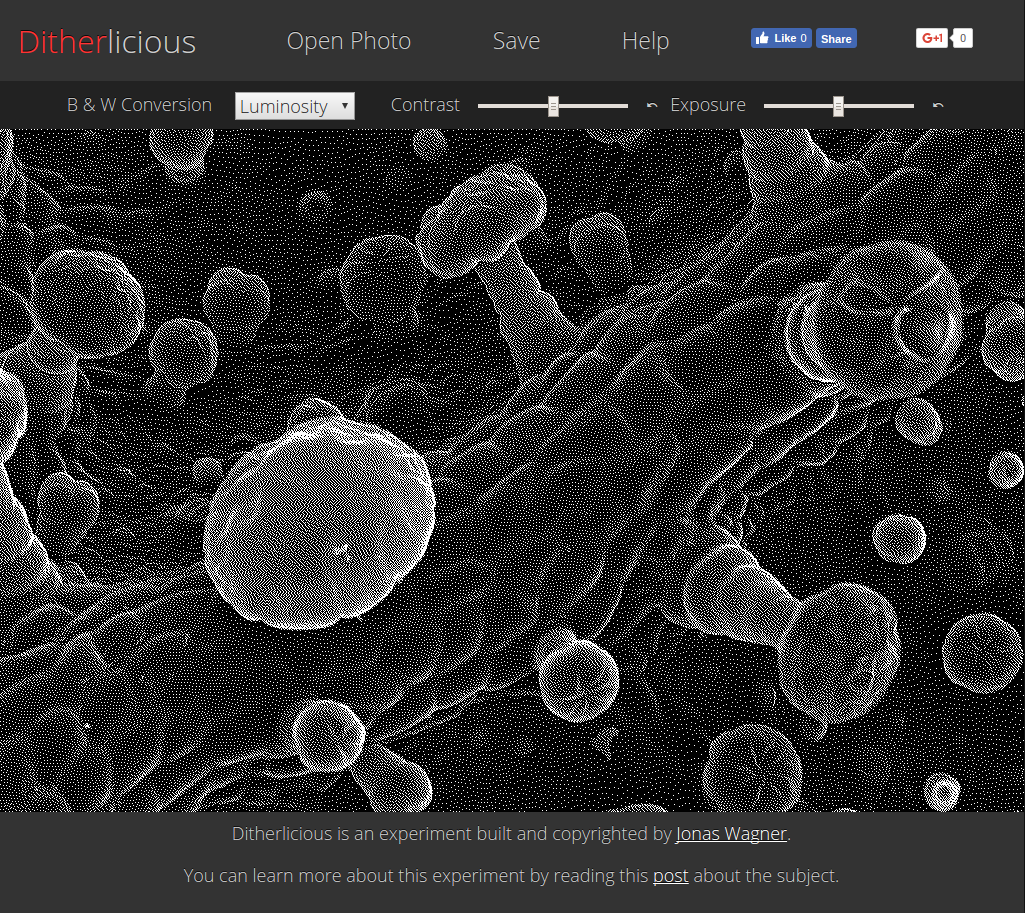

Ditherlicious - 1 Bit Image Dithering 4 Aug 2016 2:29 AM (9 years ago)

While experimenting with Black and White Conversion using PCA I also investigated dithering algorithms and played with those. I found that Stucki Dithering would yield rather pleasant results. So I created a little application for just that: Ditherlicious.
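For reference, Stucki dithering is an error diffusion scheme: each pixel is rounded to black or white and the rounding error is spread over twelve neighbours with fixed weights. The sketch below illustrates the technique on a grayscale array with values from 0 to 255; the function name, the plain left-to-right scan and the threshold of 128 are assumptions of this example, not a description of Ditherlicious internals.

```javascript
// Stucki error diffusion kernel as [dx, dy, weight]; the weights sum to 42.
const STUCKI = [
  [1, 0, 8], [2, 0, 4],
  [-2, 1, 2], [-1, 1, 4], [0, 1, 8], [1, 1, 4], [2, 1, 2],
  [-2, 2, 1], [-1, 2, 2], [0, 2, 4], [1, 2, 2], [2, 2, 1],
];

// Convert a row-major grayscale image (0..255) to 1 bit per pixel (0 or 1).
function stuckiDither(gray, width, height) {
  const work = Float32Array.from(gray); // working copy that accumulates the error
  const out = new Uint8Array(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = y * width + x;
      const bit = work[i] >= 128 ? 1 : 0;
      out[i] = bit;
      const error = work[i] - bit * 255;
      // Push the rounding error onto pixels that have not been visited yet.
      for (const [dx, dy, weight] of STUCKI) {
        const nx = x + dx;
        const ny = y + dy;
        if (nx < 0 || nx >= width || ny >= height) continue;
        work[ny * width + nx] += (error * weight) / 42;
      }
    }
  }
  return out;
}
```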

Open Ditherlicious

Photo by Tuncay (CC BY)

I hope you enjoy playing with it. :)

Black and White Conversion using PCA 8 Jul 2016 3:55 AM (9 years ago)

I have been hacking on my photo forensics tool lately. I found a few references that suggested that performing PCA on the colors of an image might reveal interesting information hidden to the naked eye. When implementing this feature I noticed that it did quite a good job at doing black & white conversions of photos. Thinking about this, it does actually make some sense: the first principal component maximizes the variance of the values, so it should result in a wide tonal range in the resulting photograph. This led me to develop a tool to explore this idea in more detail.
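To give an idea of what this means in code, here is a rough sketch of a PCA based conversion: compute the covariance of the colors, find its dominant direction and project every pixel onto it. Power iteration and the function names are choices made for this illustration; the actual tool may work differently, see its help page for details.

```javascript
// Find the mean color and the direction of greatest variance of [r, g, b] pixels.
function firstPrincipalComponent(pixels, iterations = 50) {
  const n = pixels.length;
  const mean = [0, 0, 0];
  for (const p of pixels) for (let c = 0; c < 3; c++) mean[c] += p[c] / n;

  // 3x3 covariance matrix of the color channels.
  const cov = [[0, 0, 0], [0, 0, 0], [0, 0, 0]];
  for (const p of pixels) {
    for (let i = 0; i < 3; i++) {
      for (let j = 0; j < 3; j++) cov[i][j] += ((p[i] - mean[i]) * (p[j] - mean[j])) / n;
    }
  }

  // Power iteration converges towards the eigenvector with the largest eigenvalue.
  let axis = [1, 1, 1].map((v) => v / Math.sqrt(3));
  for (let k = 0; k < iterations; k++) {
    const next = cov.map((row) => row[0] * axis[0] + row[1] * axis[1] + row[2] * axis[2]);
    const norm = Math.hypot(next[0], next[1], next[2]);
    if (norm === 0) break; // no variance along the current direction
    axis = next.map((v) => v / norm);
  }
  // The sign of an eigenvector is arbitrary; prefer "brighter means larger".
  if (axis[0] + axis[1] + axis[2] < 0) axis = axis.map((v) => -v);
  return { mean, axis };
}

// Project every pixel onto the first component and stretch the result to 0..255.
function toMonochrome(pixels) {
  const { mean, axis } = firstPrincipalComponent(pixels);
  const scores = pixels.map(
    (p) => (p[0] - mean[0]) * axis[0] + (p[1] - mean[1]) * axis[1] + (p[2] - mean[2]) * axis[2]
  );
  let min = Infinity;
  let max = -Infinity;
  for (const s of scores) {
    if (s < min) min = s;
    if (s > max) max = s;
  }
  const range = max - min || 1; // avoid dividing by zero for flat images
  return scores.map((s) => Math.round((255 * (s - min)) / range));
}
```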

This experimental tool is now available for you to play with:

29a.ch/sandbox/2016/monochrome-photo-pca/.

To give you a quick example let’s start with one of my own photographs:

While the composition with so much empty space is debatable, I find this photo a fairly good example of an image where a straight luminosity conversion fails. This is because the really saturated colors in the sky look bright/intense even if the straight up luminosity values do not suggest that.

In this case the PCA conversion does (in my opinion) a better job at reflecting the tonality in the sky. I’d strongly suggest that you experiment with the tool yourself.

If you want a bit more detail on how exactly the conversions work please have a look at the help page.

Do I think this is the best technique for black and white conversions? No. You will always be able to get better results by manually tweaking the conversion to fit your vision. Is it an interesting result? I’d say so.

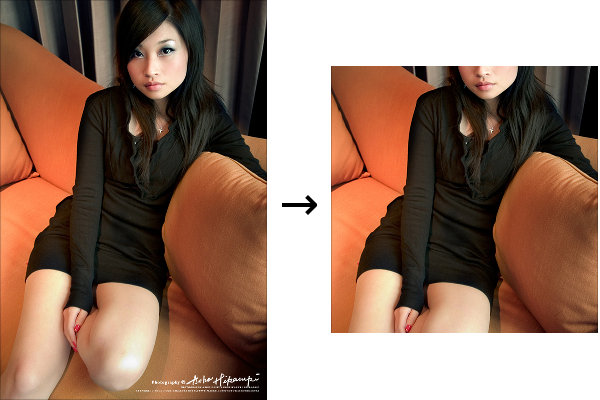

Smartcrop.js 1.0 25 Jun 2016 9:59 AM (9 years ago)

I’ve just released version 1.0 of smartcrop.js. Smartcrop.js is a javascript library I wrote to perform smart image cropping, mainly for generating good thumbnails. The new version includes much better support for node.js by dropping the canvas dependency (via smartcrop-gm and smartcrop-sharp) as well as support for face detection by providing annotations. The API has been cleaned up a little bit and is now using Promises.

Another little takeaway from this release is that I should set up CI even for my little open source projects. I came to this conclusion after having created a dependency mess using npm link locally, which led to everything working fine on my machine but the published modules being broken. I’ve already set up travis for smartcrop-gm, smartcrop-sharp and simplex-noise.js.

More of my projects are likely to follow.

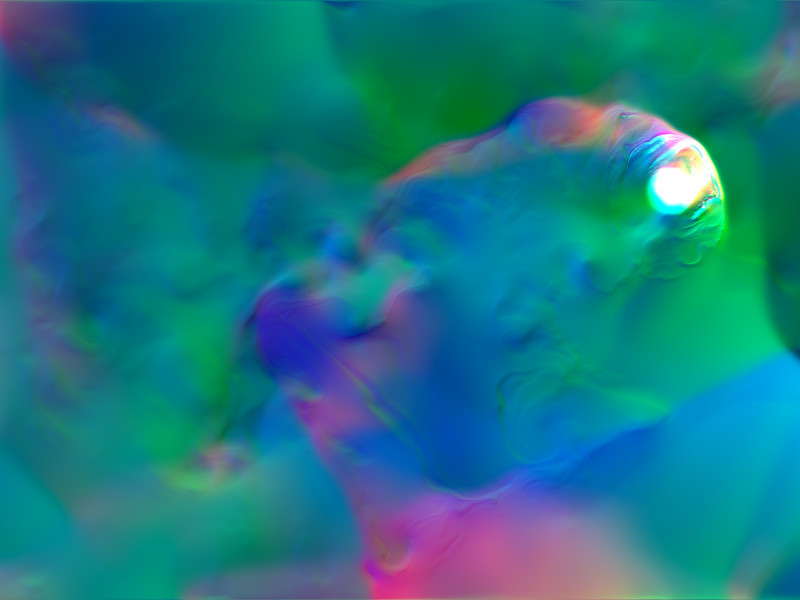

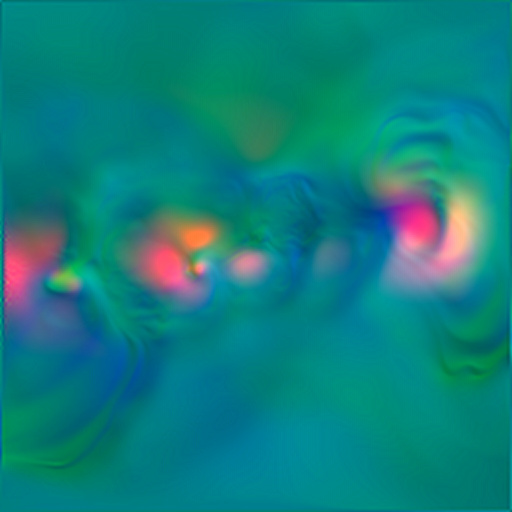

Normalmap.js Javascript Lighting Effects 13 Mar 2016 1:45 PM (9 years ago)

Back in 2010 I did a little experiment with normal mapping and the canvas element. The normal mapping technique makes it possible to create interactive lighting effects based on textures. Looking for an excuse to dive into computer graphics again, I created a new version of this demo.

This time I used WebGL Shaders and a more advanced physically inspired material system based on publications by Epic Games and Disney. I also implemented FXAA 3.11 to smooth out some of the aliasing produced by the normal maps. The results of this experiment are now available as a library called normalmap.js. Check out the demos. It’s a lot faster and better looking than the old canvas version. Maybe you find a use for it. :)

Demos

You can view larger and sharper versions of these demos on 29a.ch/sandbox/2016/normalmap.js/.

You can get the source code for this library on github.

Future

I plan to create some more demos as well as tutorials on creating normalmaps in the future.

Let's encrypt 29a.ch 27 Dec 2015 3:51 PM (9 years ago)

I migrated this website to HTTPS using certificates by Let’s Encrypt. This has several benefits. The one I’m most excited about is being able to use Service Workers to provide offline support for my little apps.

Let’s Encrypt is an amazing new certificate authority which allows you to install an SSL/TLS certificate automatically and for free. This means getting a certificate installed on your server can be as little work as running a command on your server:

./letsencrypt-auto --apache

The service is currently still in beta but as you can hopefully see the certificates it produces are working just fine. I encourage you to give it a try.

If anything on this website got broken because of the move to HTTPS, please let me know!

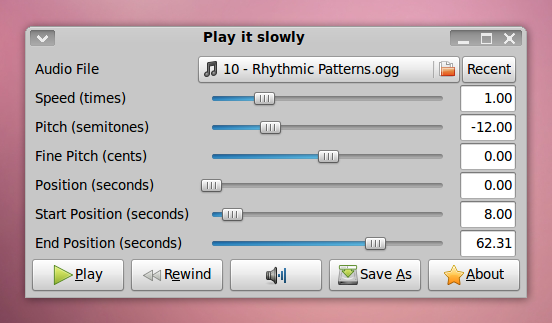

Play it Slowly 22 Dec 2015 9:12 AM (9 years ago)

Play it Slowly is a piece of software to play back audio files at a different speed or pitch. It also allows you to loop over a certain part of a file. It's intended to help you learn or transcribe songs. It can also play videos thanks to gstreamer. Play it Slowly is intended to be used on a GNU/Linux system like Ubuntu.

Features

- Plays every file gstreamer does (mp3, ogg vorbis, midi, even flv!)

- Can use alsa and jack

- Change speed and pitch

- Loop over certain parts

- Export to wav

Screenshot

Alternative

I also created a free web based alternative to play it slowly called Timestretch Player. It features higher quality time stretching and a more fancy UI. Timestretch Player works on Windows too.

Download (Source Code)

If possible install Play it Slowly via the package manager of your linux distribution!

- playitslowly-1.5.1.tar.gz (Python3, GTK3, GStreamer 1)

- playitslowly-1.4.0.tar.gz (Legacy)

Packages

Development

Feel free to join the development of playitslowly at github.

Time Stretching Audio in Javascript 6 Dec 2015 8:00 AM (9 years ago)

Seven years ago I wrote a piece of software called Play it Slowly. It allows the user to change the speed and pitch of an audio file independently. This is useful for example for practicing an instrument or doing transcriptions.

Now I created a new web based version of Play it Slowly called TimeStretch Player. It’s written in Javascript and using the WebAudio API.

It features (in my opinion) much better audio quality for larger time stretches as well as a 3.14159 × cooler looking user interface. But please note that this is beta stage software; it’s still far from perfect and polished.

How the time stretching works

The time stretching algorithm that I use is based on a Phase Vocoder with some simple improvements.

It works by cutting the audio input into overlapping chunks, decomposing those into their individual components using a FFT, adjusting their phase and then resynthesizing them with a different overlap.
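The "adjusting their phase" step is the heart of the technique. As a rough sketch of the textbook phase vocoder update for a single frequency bin (not the exact code of TimeStretch Player, and all names here are made up for the illustration): measure how far the phase advanced between two analysis frames, derive the true frequency from that, and advance the output phase by that frequency over the new hop size.

```javascript
// Wrap an angle into the range [-PI, PI].
function princarg(x) {
  return x - 2 * Math.PI * Math.round(x / (2 * Math.PI));
}

// Compute the output phase of one FFT bin for the next synthesis frame.
function nextSynthesisPhase({
  prevAnalysisPhase, // phase of this bin in the previous input frame
  analysisPhase, // phase of this bin in the current input frame
  prevSynthesisPhase, // phase written to the previous output frame
  bin, // index of the FFT bin
  fftSize, // number of samples per frame
  analysisHop, // distance between input frames in samples
  synthesisHop, // distance between output frames in samples
}) {
  const omega = (2 * Math.PI * bin) / fftSize; // bin center frequency in rad/sample
  const expected = omega * analysisHop; // phase advance of a sine exactly at the bin center
  const deviation = princarg(analysisPhase - prevAnalysisPhase - expected);
  const trueFrequency = omega + deviation / analysisHop;
  return princarg(prevSynthesisPhase + trueFrequency * synthesisHop);
}
```

With `synthesisHop` twice as large as `analysisHop` the audio becomes twice as long while each bin keeps its frequency.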

Oversimplified Explanation

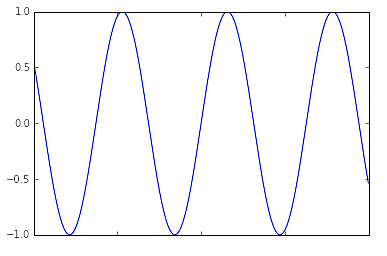

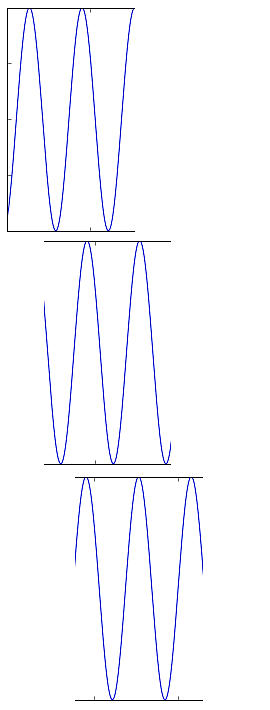

Suppose we have a simple wave like this:

We can cut it into overlapping pieces like this:

By changing the overlap between the pieces we can do the time stretching:

This messes up the phases so we need to fix them up:

Now we can just combine the pieces to get a longer version of the original:

In practice things are of course a bit more complicated and there are a lot of compromises to be made. ;)

Much better explanation

If you want a more precise description of the phase vocoder technique than the handwaving above I recommend reading the paper Improved phase vocoder time-scale modification of audio by Jean Laroche and Mark Dolson. It explains the basic phase vocoder as well as some simple improvements.

If you do read it and are wondering what the eff the angle sign (∠) used in its expressions means:

It denotes the phase - in practice the argument of the complex number of the term.

I think it took me about an hour to figure that out with any certainty.

YAY mathematical notation.

Pure CSS User Interface

I had some fun by creating all the user interface elements using pure CSS, no images are used except for the logo. It’s all gradients, borders and shadows. Do I recommend this approach? Not really, but it sure is a lot of fun! Feel free to poke around with your browsers dev tools to see how it was done.

Future Features

While developing the time stretching functionality I also experimented with some other features like a karaoke mode that can cancel individual parts of an audio file while keeping the rest of the stereo field intact. This can be useful to remove or isolate parts of songs for instance the vocals or a guitar solo. However, the quality of the results was not comparable to the time stretching so I decided to remove the feature for now. But you might get to see that in another app in the future. ;)

Library Release

I might release the phase vocoder code in a standalone node library in the future but it needs a serious cleanup before that can happen.

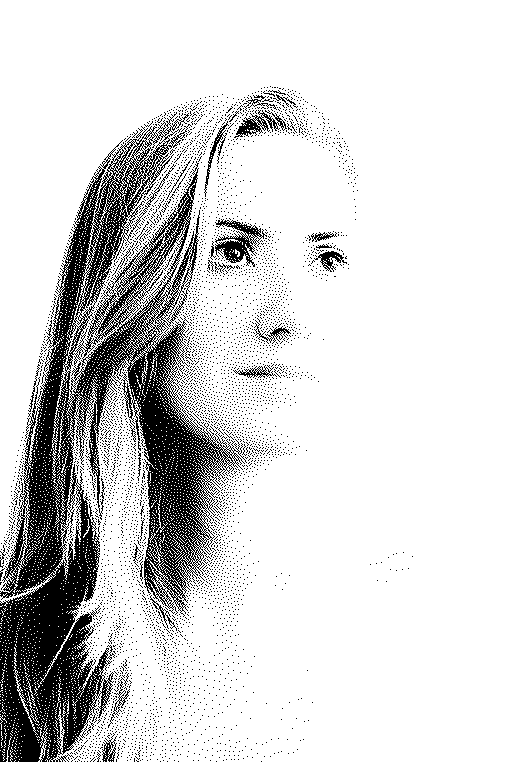

Noise Analysis for Image Forensics 21 Aug 2015 12:32 PM (10 years ago)

People seem to be liking Forensically. I enjoy hacking on it. So I upgraded it with a new tool - noise analysis. I also updated the help page and implemented histogram equalization for the magnifier/ELA.

Noise Analysis

The basic idea behind this tool is very simple. Natural images are full of noise. When they are modified this often leaves visible traces in the noise in an image. But seeing the noise in an image can be hard. This is where the new tool in Forensically comes in. It takes a very simple noise reduction filter (a separable Median Filter) and reverses its results. Rather than removing the noise it removes the rest of the image.
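A minimal sketch of that idea, assuming a 3 tap median applied first horizontally and then vertically on a grayscale image (the window size and function names are assumptions of this example, not details taken from Forensically):

```javascript
// Branch-free median of three numbers (works for floats as well).
const median3 = (a, b, c) => Math.max(Math.min(a, b), Math.min(Math.max(a, b), c));

// One pass of a 3 tap median filter along the direction (dx, dy), clamping at the edges.
function medianPass(src, width, height, dx, dy) {
  const out = new Float32Array(src.length);
  const clamp = (v, max) => Math.min(max, Math.max(0, v));
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const sample = (k) =>
        src[clamp(y + k * dy, height - 1) * width + clamp(x + k * dx, width - 1)];
      out[y * width + x] = median3(sample(-1), sample(0), sample(1));
    }
  }
  return out;
}

// The noise is what the denoising filter would have thrown away.
function noiseResidual(gray, width, height) {
  const horizontal = medianPass(gray, width, height, 1, 0);
  const denoised = medianPass(horizontal, width, height, 0, 1);
  return Float32Array.from(gray, (value, i) => value - denoised[i]);
}
```

For display the residual would typically be amplified and offset so that both positive and negative noise are visible.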

One of the benefits of this tool is that it can recognize modifications like airbrushing, warps, deforms and transformed clones that the clone detection and error level analysis might not catch.

Please be aware that this is still a work in progress.

Example

Enough talk, let me show you an example. I gave myself a nosejob with the warp tool in GIMP, just for you.

As you can see the effect is relatively subtle. Not so the traces it leaves in the noise!

The resampling done by the warp tool loses some of the high frequency noise, creating a black halo around the region.

Can you find any anomalies in the demo image using noise analysis?

A bit of code

I guess that many of the readers of this blog are fellow coders and hackers. So here is a cool hack for you. I found this in some old sorting code I wrote for a programming competition but I don't remember where I had it from originally. In order to make the median filter fast we need a fast way to find the median of three variables. A stupidly slow way to go about this could look like this:

// super slow
// (the comparator matters: the default sort compares values as strings)
[a, b, c].sort((x, y) => x - y)[1]

Now the obvious way to optimize this would be to transform it into a bunch of ifs. That's going to be faster. But can we do even better? Can we do it without branching?

// fast (integers only, because ^ operates on 32 bit integers)
let max = Math.max(Math.max(a, b), c),
    min = Math.min(Math.min(a, b), c),
    // the max and the min value cancel out because of the xor, the median remains
    median = a ^ b ^ c ^ max ^ min;

Min and max can be computed without branching on most if not all cpu architectures. I didn't check how it's handled in javascript engines. But I can show you that the second approach is ~100x faster with this little benchmark.

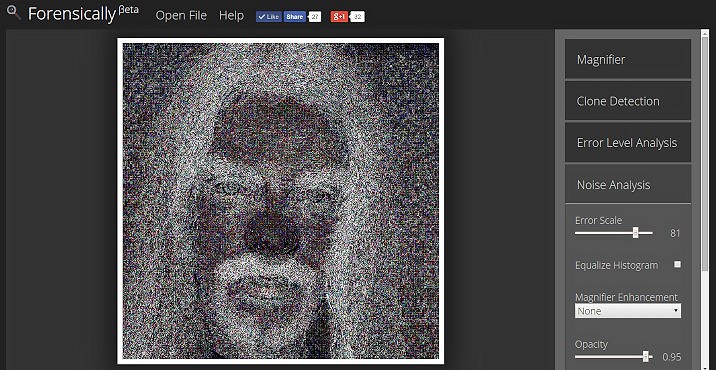

Forensically, Photo Forensics for the Web 16 Aug 2015 12:21 PM (10 years ago)

Back in 2012 I hacked together a little tool for performing Error Level Analysis on images. Despite being such a simple tool with, frankly, a bad UI it has been used by over 250'000 people.

A few days ago I randomly stumbled across the paper Detection of Copy-Move Forgery in Digital Images by Jessica Fridrich, David Soukal, and Jan Lukáš. I wanted to see if I could do something similar and make it run in a browser. It took a good bit of tweaking but I ended up with something that works. I took a copy of my photo film emulator as a base for the UI, adapted it a bit, ported the old ELA code and added some new tools. The result is called Forensically.

How to use Forensically

If you want some guidance on how to use Forensically you get to pick your poison. On offer is a 12 minute monologue in the form of a tutorial video or a whole bunch of cryptic text on the help page. I'm sorry that neither is very good.

How the Clone Detection works

I guess the most interesting feature of this new tool is the clone detection. So let me reveal to you how I made it work. I will try to keep the explanation simple. If there is interest in it I might still write a more technical description of the algorithm later.

The basic idea

Create a Table
Move a window over the image, for each position of the window
    Use all of the pixels in the window as a key
    If the key is already in the table
        We found a clone! Mark it.
    Else
        Add the key to the table

This does actually work, but it will only find perfect copies. We want the matching to be more fuzzy.
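The basic idea translates almost directly into code. A minimal sketch for a row-major grayscale image follows; the function name, the string key and the default window size of 8 are choices made for this example.

```javascript
// Find windows whose pixels are exact copies of an earlier window.
function findExactClones(gray, width, height, size = 8) {
  const table = new Map(); // key -> position where the block was first seen
  const clones = [];
  for (let y = 0; y + size <= height; y++) {
    for (let x = 0; x + size <= width; x++) {
      // Use all of the pixels in the window as the key.
      const block = [];
      for (let by = 0; by < size; by++) {
        for (let bx = 0; bx < size; bx++) block.push(gray[(y + by) * width + x + bx]);
      }
      const key = block.join(',');
      if (table.has(key)) {
        clones.push({ from: table.get(key), to: { x, y } }); // we found a clone
      } else {
        table.set(key, { x, y });
      }
    }
  }
  return clones;
}
```

On real photos large uniform areas such as sky or overexposed regions produce plenty of such exact matches, which is one reason the later steps are needed.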

Compression

So the next key step is to make the matching more fuzzy. We do this by compressing the key to make it less unique. You can think of this step as converting each of the little blocks into a tiny JPEG and then using those pixels as a key. The actual implementation is using Haar wavelets for this step. You can see the compressed blocks that are used by clicking on Show Quantized Image in the Clone Detection Tool.

This works too but now we have too many results!

Filtering

So the next step is to filter all of the blocks and to throw away the boring ones. This is done by comparing the amount of detail in the high frequencies to a threshold. You can think of it as subtracting a blurred image of the block from the block and then looking at how much is left of the pixels. In practice the blurring is not required because the wavelet step has already done it for us. You can see the rejected blocks as black spots in the quantized image.
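The compression and filtering steps can be sketched roughly as follows. Averaging 2x2 cells gives, up to a scale factor, the low pass coefficients of one Haar wavelet level, so it serves as a stand-in here. The names, the number of levels and the quantization step are assumptions of this sketch, not the values used by Forensically.

```javascript
// Average non-overlapping 2x2 cells of a square block (one Haar low pass level).
function halve(block, size) {
  const half = size / 2;
  const out = new Array(half * half);
  for (let y = 0; y < half; y++) {
    for (let x = 0; x < half; x++) {
      const i = 2 * y * size + 2 * x;
      out[y * half + x] = (block[i] + block[i + 1] + block[i + size] + block[i + size + 1]) / 4;
    }
  }
  return out;
}

// Coarse, quantized version of the block: similar blocks end up with the same key.
function fuzzyKey(block, size, levels = 2, step = 16) {
  let coarse = block;
  let currentSize = size;
  for (let level = 0; level < levels; level++) {
    coarse = halve(coarse, currentSize);
    currentSize /= 2;
  }
  return coarse.map((v) => Math.round(v / step)).join(',');
}

// Mean absolute difference between the block and its coarse version.
// Blocks below some threshold are "boring" and can be thrown away.
function detailEnergy(block, size) {
  const coarse = halve(block, size);
  const half = size / 2;
  let sum = 0;
  for (let y = 0; y < size; y++) {
    for (let x = 0; x < size; x++) {
      sum += Math.abs(block[y * size + x] - coarse[(y >> 1) * half + (x >> 1)]);
    }
  }
  return sum / block.length;
}
```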

At this stage the algorithm works but it does still show a lot of uninteresting copies of blocks that just happen to look similar.

Clustering

So now we take another look at all of the clones that we found. If the distance between the source and destination is too small we reject them. Next we look at clones that start from a similar place and are copied into a similar direction. If we find fewer than Minimal Cluster Size other clones that are similar we discard the clone as noise.

Source Code

I haven't figured out how I want to license the code and assets yet. But I do plan to release it in some form.

Feedback

As always, feedback is appreciated both on the app and on the post. Would you like future posts to be more in depth and technical or do you like the current format?

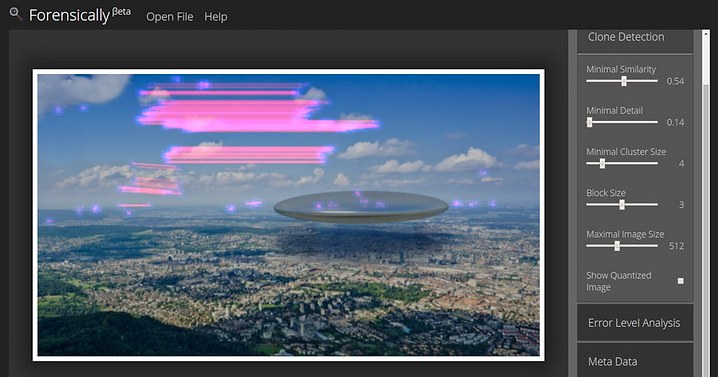

Light Leaks in the Film Emulator 28 Jul 2015 3:20 PM (10 years ago)

Inspired by the feature in the recent release of g'mic, I added a new feature to my Film Emulator, light leaks.

G'mic seems to use predefined images for the light leaks. I decided to go another route and created a procedural version of it. The benefits are clear: no big images to download, and an infinite variation of light leaks.

The implementation is also rather straight forward, in fact it shares most of the code with the already existing grain code. It uses simplex noise at a fairly low frequency to create a colored plasma. Three octaves of simplex noise are used for the luminance, and a single octave of simplex noise is used to randomize each color channel. This was just my first approximation but in my opinion it works better than it has any right to, so I'll stick to it for now.
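As a rough illustration of the octave idea, here is a sketch that sums several octaves of a 2D noise function and combines a three octave luminance with one octave per color channel. The noise functions are passed in (any function returning values between -1 and 1 works, for example from a simplex noise library), and the way luminance and color are multiplied together is a guess made for this example, not the actual implementation.

```javascript
// Sum several octaves of noise: each octave doubles the frequency and halves the amplitude.
function octaveNoise(noise2D, x, y, octaves, frequency) {
  let sum = 0;
  let total = 0;
  let amplitude = 1;
  for (let octave = 0; octave < octaves; octave++) {
    sum += amplitude * noise2D(x * frequency, y * frequency);
    total += amplitude;
    amplitude /= 2;
    frequency *= 2;
  }
  return sum / total; // normalized, stays within [-1, 1]
}

// Color of the light leak at (x, y), each channel between 0 and 1.
// noise: { luminance, red, green, blue }, four independent 2D noise functions.
function lightLeakColor(noise, x, y, frequency = 1.5) {
  const luminance = 0.5 + 0.5 * octaveNoise(noise.luminance, x, y, 3, frequency);
  const channel = (fn) => luminance * (0.5 + 0.5 * octaveNoise(fn, x, y, 1, frequency));
  return [channel(noise.red), channel(noise.green), channel(noise.blue)];
}
```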

Javascript Film Emulation 7 Jun 2015 12:36 PM (10 years ago)

I hacked together a little analog film emulation tool in Javascript. It's based on the awesome work of Pat David. I wrote it mainly to play with some new tech but I liked the result enough to share it with you. You can try it here:

It also works on android phones running chrome, give it a try!

How the Film Emulation works

I guess the most interesting part for most people is the actual film emulation code. It's using Color Lookup Tables (cluts).

So in simplistic terms:

For every pixel in the image
    Take its color values r, g, b
    Look up its new color value in the lookup table
    r', g', b' = colorLookupTable[r, g, b]
    Set the pixel to the color values (r', g', b')

In practice there are a few more considerations. Most cluts don't contain values for all 16 777 216 (2^24) colors in the rgb space. A simplistic solution to this problem would be to always just use the closest color (nearest-neighbor interpolation). This is fast but results in very ugly banding artifacts.

So to keep things fast I use random dithering for the previews and trilinear filtering for the final output. The random dithering is probably a suboptimal choice, but it was easy to implement.
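Trilinear filtering means blending the eight lookup table entries that surround a color. Here is a minimal sketch, assuming the table is a flat array with `size` entries per axis, stored with red varying fastest and values from 0 to 255; the layout and function names are assumptions made for this example.

```javascript
// Look up a color in a size x size x size clut with trilinear interpolation.
// lut layout: index ((b * size + g) * size + r) * 3 + channel.
function sampleClut(lut, size, r, g, b) {
  const scale = (size - 1) / 255;
  const fr = r * scale;
  const fg = g * scale;
  const fb = b * scale;
  const r0 = Math.floor(fr);
  const g0 = Math.floor(fg);
  const b0 = Math.floor(fb);
  const r1 = Math.min(r0 + 1, size - 1);
  const g1 = Math.min(g0 + 1, size - 1);
  const b1 = Math.min(b0 + 1, size - 1);
  const tr = fr - r0; // fractional position inside the cell, 0..1
  const tg = fg - g0;
  const tb = fb - b0;
  const lerp = (from, to, t) => from + (to - from) * t;
  const out = [0, 0, 0];
  for (let c = 0; c < 3; c++) {
    const at = (ri, gi, bi) => lut[((bi * size + gi) * size + ri) * 3 + c];
    // Interpolate along red, then green, then blue.
    const c00 = lerp(at(r0, g0, b0), at(r1, g0, b0), tr);
    const c10 = lerp(at(r0, g1, b0), at(r1, g1, b0), tr);
    const c01 = lerp(at(r0, g0, b1), at(r1, g0, b1), tr);
    const c11 = lerp(at(r0, g1, b1), at(r1, g1, b1), tr);
    out[c] = lerp(lerp(c00, c10, tg), lerp(c01, c11, tg), tb);
  }
  return out;
}

// Identity table that maps every color to itself, handy for testing.
function identityClut(size) {
  const lut = new Float32Array(size * size * size * 3);
  let i = 0;
  for (let b = 0; b < size; b++) {
    for (let g = 0; g < size; g++) {
      for (let r = 0; r < size; r++) {
        lut[i++] = (255 * r) / (size - 1);
        lut[i++] = (255 * g) / (size - 1);
        lut[i++] = (255 * b) / (size - 1);
      }
    }
  }
  return lut;
}
```

With the identity table every color should come back unchanged, even if the table only stores the eight corners of the cube.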

You can find more details about how the lookup tables were created on Pat David's website.

Technology

As stated at the beginning I wrote this application to play with new technology, so there is a lot going on in this little application.

The entire code is written in Javascript (ES6 to be precise). Which is then converted to more mainstream javascript using babel.js.

It is using the canvas API for accessing the pixel data of images and then processes them in web workers for parallelism using transferable objects to avoid copies.

WebGL would obviously also be suitable for this task. I might even write an implementation in the future.

The css makes heavy use of flexible boxes and is written in scss. The icon font was generated using fontello.

The whole thing is built using grunt and browserify.

Of course these are just a few of the bits of tech that I played with to make this happen. If you want to know even more, just look at the source.

Source Code

You can find the source code of this tool on github. The code is not licensed under an open source license and does not come with all the data files in order to prevent lazy people from just copying everything and pretending it is their own work. You are of course free to study the code and take bits and pieces, I consider this fair use. Just attribute them to me properly. If you have grander plans for it and the lack of a license prevents you from following up on them feel free to contact me.

Full-text search example using lunr.js 3 Dec 2014 1:00 PM (10 years ago)

I did a little experiment today. I added full-text search to this website using lunr.js. Lunr is a simple full-text search engine that can run inside of a web browser using Javascript.

Lunr is a bit like solr, but much smaller and not as bright, as the author Oliver beautifully puts it.

With it I was able to add full text search to this site in less than an hour. That's pretty cool if you ask me. :)

You can try out the search function I built on the articles page of this website.

I also enabled source maps so you can see how I hacked together the search interface. But let me give you a rough overview.

Indexing

The indexing is performed when I build the static site. It's pretty simple.

// create the index

var index = lunr(function(){

// boost increases the importance of words found in this field

this.field('title', {boost: 10});

this.field('abstract', {boost: 2});

this.field('content');

// the id

this.ref('href');

});

// this is a store with some document meta data to display

// in the search results.

var store = {};

entries.forEach(function(entry){

index.add({

href: entry.href,

title: entry.title,

abstract: entry.abstract,

// hacky way to strip html, you should do better than that ;)

content: cheerio.load(entry.content.replace(/<[^>]*>/g, ' ')).root().text()

});

store[entry.href] = {title: entry.title, abstract: entry.abstract};

});

fs.writeFileSync('public/searchIndex.json', JSON.stringify({

index: index.toJSON(),

store: store

}));

The resulting index is 1.3 MB, gzipping brings it down to a more reasonable 198 KB.

Search Interface

The other part of the equation is the search interface. I went for some simple jQuery hackery.

jQuery(function($) {

var index,

store,

data = $.getJSON(searchIndexUrl);

data.then(function(data){

store = data.store,

// create index

index = lunr.Index.load(data.index)

});

$('.search-field').keyup(function() {

var query = $(this).val();

if(query === ''){

jQuery('.search-results').empty();

}

else {

// wait until the index has been loaded, then perform the search

data.then(function() {

var results = index.search(query);

$('.search-results').empty().append(

results.length ?

results.map(function(result){

var el = $('<p>')

.append($('<a>')

.attr('href', result.ref)

.text(store[result.ref].title)

);

if(store[result.ref].abstract){

el.after($('<p>').text(store[result.ref].abstract));

}

return el;

}) : $('<p><strong>No results found</strong></p>')

);

});

}

});

});

Learn More

If you want to learn more about how lunr works I recommend reading this article by the author.

If you still want to learn more about search, then I can recommend this great free book on the subject called Introduction to Information Retrieval by Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze.

Source Maps with Grunt, Browserify and Mocha 20 Nov 2014 2:32 AM (10 years ago)

If you have been using source maps in your projects you have probably also noticed that they do not work with all tools. For instance, if I run my browserified tests with mocha I get something like this:

ReferenceError: dyanmic0 is not defined

at Context.<anonymous> (http://0.0.0.0:8000/test/tests.js:4342:44)

at callFn (http://0.0.0.0:8000/test/mocha.js:4428:21)

at timeslice (http://0.0.0.0:8000/test/mocha.js:5989:27)

...

That's not exactly very helpful. After some searching I found a node module to solve this problem: node-source-map-support. It's easy to use and magically makes things work.

Simply:

npm install --save-dev source-map-support

require('source-map-support').install();

I place it in a file called dev.js that I include in all development builds.

Now you get nice stack traces in mocha, jasmine, q and most other tools:

ReferenceError: dyanmic0 is not defined

at Context.<anonymous> (src/physics-tests.js:44:1)

...

Nicely enough this also works together with Q's long stack traces:

require('source-map-support').install();

var Q = require('q');

Q.longStackSupport = true;

Q.onerror = function (e) {

console.error(e && e.stack);

};

function theDepthsOfMyProgram() {

Q.delay(100).then(function(){

}).done(function explode() {

throw new Error("boo!");

});

}

Will result in:

Error: boo!

at explode (src/dev.js:12:1)

From previous event:

at theDepthsOfMyProgram (src/dev.js:11:1)

at Object./home/jonas/dev/sandbox/atomic-action/src/dev.js.q (src/dev.js:16:1)

...

That's more helpful. :) Thank you Evan!

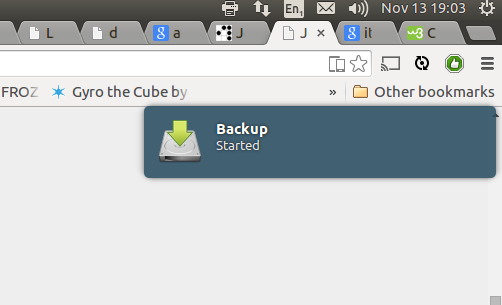

Desktop rdiff-backup Script 13 Nov 2014 7:50 AM (10 years ago)

Updated for Ubuntu 15.10.

I have recently revamped the way I back up my desktop. In this post I document the thoughts that went into this. This is mostly for myself but you might still find it interesting.

To encrypt or not to encrypt

I do daily incremental backups of my desktop to an external hard drive. This drive is unencrypted.

Encrypting your backups has obvious benefits - it protects your data from falling into the wrong hands. But at the same time it also makes your backups much more fragile. A single corrupted bit can spell disaster for anything from a single block to your entire backup history. You also need to find a safe place to store a strong key - no easy task.

Most of my data I'd rather have stolen than lost. A lot of it is open source anyway. :)

The data that I'd rather lose than have fall into the wrong hands (mostly keys) is stored and backed up in encrypted form only. For this I use the gpg agent and ecryptfs.

Encrypting only the sensitive data rather than the whole disk increases the risk of it being leaked. Recovering those leaked keys will however require a fairly powerful adversary which would have other ways of getting his hands on that data anyway so I consider this strategy to be a good tradeoff.

As a last line of defense I have an encrypted disk stored away offsite. I manually update it a few times a year to reduce the chance of losing all of my data in case of a break-in, fire or another catastrophic event.

Before showing you the actual backup script I'd like to explain why I'm back to using rdiff-backup for my backups.

Duplicity vs rdiff-backup vs rsync and hardlinks

Duplicity and rdiff-backup are some of the most popular options for incremental backups on Linux (ignoring the more enterprisey stuff like bacula). rsnapshot, which uses rsync and hardlinks, is another one.

The main drawback to using rsync and hardlinks is that it stores a full copy of every file whenever it changes. This can be a good tradeoff especially when fast random access to historic backups is needed. This combined with snapshots is what I would most likely use for backing up production servers, where getting back some (or all) files of a specific historic version as fast as possible is usually what is needed. For my desktop however incremental backups are more of a backup of a backup. Fast access is not needed but I want to have the history around just in case I get the order of the -iname and -delete arguments to find wrong again without noticing.

Duplicity backs up your data by producing compressed (and optionally encrypted) tars that contain diffs of a full backup. This allows it to work with dumb storage (like s3) and makes encrypted backups relatively easy. However if even just a few bits get corrupted any backups after the corruption can be unreadable. This can be somewhat mitigated by doing frequent full backups, but that takes up space and increases the time needed to transfer backups.

rdiff-backup works the other way around. It always stores the most recent version of your data as a full mirror. So you can just cp that one file you need in a pinch. Increments are stored as 'reverse diffs' from the most current version. So if a diff is corrupted only historic data is affected. Corruption to a file will only corrupt that file, which is what I prefer.
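The idea of a full mirror plus reverse diffs can be illustrated with a toy model. This is only a sketch of the concept and not how rdiff-backup is implemented: it stores whole previous file contents in memory rather than binary deltas on disk, and all names are made up.

```javascript
// Toy model: `mirror` holds the latest version of every file,
// `increments` holds, per backup run, what is needed to go one run back.
function createStore() {
  return { mirror: {}, increments: [] };
}

function backup(store, files) {
  var reverse = {};
  Object.keys(files).forEach(function (name) {
    if (store.mirror[name] !== files[name]) {
      // remember the previous content (undefined means: did not exist)
      reverse[name] = store.mirror[name];
    }
  });
  Object.keys(store.mirror).forEach(function (name) {
    // files deleted since the last run are kept in the increment
    if (!(name in files)) reverse[name] = store.mirror[name];
  });
  store.increments.push(reverse);
  store.mirror = Object.assign({}, files);
}

// Rebuild the state as it was `runsBack` backup runs ago by starting
// from the mirror and applying the reverse increments, newest first.
function restore(store, runsBack) {
  var files = Object.assign({}, store.mirror);
  for (var i = 0; i < runsBack; i++) {
    var reverse = store.increments[store.increments.length - 1 - i];
    Object.keys(reverse).forEach(function (name) {
      if (reverse[name] === undefined) delete files[name];
      else files[name] = reverse[name];
    });
  }
  return files;
}
```

In this model `restore(store, 0)` never touches an increment, which mirrors the property described above: a damaged increment only affects history, not the most recent copy.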

The Script

Most backup scripts you find on the net are written for backing up servers or headless machines. For backing up Desktop Linux machines the most popular solution seems to be deja-dup which is a frontend for duplicity.

As I want to use rdiff-backup I hacked together my own script. Here is what it roughly does.

- Mounts backup device by label via udisks

- Communicates start of backup via desktop notifications using notify-send

- Runs backup via rdiff-backup

- Deletes old increments after 8 weeks

- Communicates errors or success via desktop notifications.

#!/bin/bash

BACKUP_DEV_LABEL="backup0"

BACKUP_DEV="/dev/disk/by-label/$BACKUP_DEV_LABEL"

BACKUP_DEST="/media/$USER/$BACKUP_DEV_LABEL/fortress-home"

BACKUP_LOG="$HOME/.local/tmp/backup.log"

BACKUP_LOG_ERROR="$HOME/.local/tmp/backup.err.log"

# delay backup a bit after the login

sleep 3600

# unmount if already mounted, ensures it's always properly mounted in /media

udisksctl unmount -b $BACKUP_DEV

# Mounting disks via udisks, this doesn't require root

udisksctl mount -b $BACKUP_DEV 2> $BACKUP_LOG_ERROR > $BACKUP_LOG

notify-send -i document-save "Backup Started"

rdiff-backup --print-statistics --exclude /home/jonas/Private --exclude MY_OTHER_EXCLUDES $HOME $BACKUP_DEST 2>> $BACKUP_LOG_ERROR >> $BACKUP_LOG

if [ $? != 0 ]; then

{

echo "BACKUP FAILED!"

# notification

MSG=$(tail -n 5 $BACKUP_LOG_ERROR)

notify-send -u critical -i error "Backup Failed" "$MSG"

# dialog

notify-send -u critical -t 0 -i error "Backup Failed" "$MSG"

exit 1

} fi

rdiff-backup --remove-older-than 8W $BACKUP_DEST

udisksctl unmount -b $BACKUP_DEV

STATS=$(cat $BACKUP_LOG|grep '^Errors\|^ElapsedTime\|^TotalDestinationSizeChange')

notify-send -t 1000 -i document-save "Backup Complete" "$STATS"

This script runs whenever I log in. I added it via the Startup Applications settings in Ubuntu.

The backup ignores the ecryptfs Private folder but does include the encrypted .Private folder thereby only backing up the cipher texts of sensitive files.

I like using disk labels for my drives. The disk label can easily be set using e2label:

e2label /dev/sdc backup0

I do the offsite backup by manually mounting the LUKS-encrypted disk and running a simple rsync script. I might migrate this to Amazon Glacier at some point.

I hope this post is useful to someone including future me. ;)

smartcrop.js ken burns effect 9 May 2014 3:00 AM (11 years ago)

This is an experiment that multiple people have suggested to me after I showed them smartcrop.js. The idea is to let smartcrop pick the start and end viewports for the Ken Burns effect. This could be useful to automatically create slide shows from a bunch of photos. Given that smartcrop.js is designed for a different task it does work quite well. But see for yourself.

I'm sure it could be much improved by actually trying to zoom on the center of interest rather than just having it in frame. The actual animation was implemented using css transforms and transitions. If you want to have a look you can find the source code on github.
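As a rough sketch of how two crops can be turned into such an animation (not the code of the experiment): the crop objects are assumed to be plain `{x, y, width, height}` rectangles in image pixels with the same aspect ratio as the viewport, and the helper names are made up.

```javascript
// Build a CSS transform that makes `crop` fill a viewport of the given
// width, assuming transform-origin is set to the top left corner.
function cropToTransform(crop, viewportWidth) {
  var scale = viewportWidth / crop.width;
  return 'translate(' + (-crop.x * scale) + 'px, ' + (-crop.y * scale) +
    'px) scale(' + scale + ')';
}

// Animate an image element from one crop to another using a CSS transition.
// The image is expected to sit inside a container with overflow: hidden.
function kenBurns(img, startCrop, endCrop, viewportWidth, seconds) {
  img.style.transformOrigin = '0 0';
  img.style.transform = cropToTransform(startCrop, viewportWidth);
  // force a layout so the start state is applied before the transition begins
  img.getBoundingClientRect();
  img.style.transition = 'transform ' + seconds + 's ease-in-out';
  img.style.transform = cropToTransform(endCrop, viewportWidth);
}
```

For example, `cropToTransform({x: 100, y: 50, width: 200, height: 100}, 400)` scales the image by 2 and shifts it so that the crop's top left corner lands at the viewport origin.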

Introducing smartcrop.js 3 Apr 2014 2:00 PM (11 years ago)

Image cropping is a common task in many web applications. Usually just cutting out the center of the image works out ok. It's often a compromise and sometimes it fails miserably.

Can we do better than that? I wanted to try.

Smartcrop.js is the result of my experiments with content aware image cropping. It uses fairly simple image processing and a few rules to attempt to create better crops of images.

This library is still in its infancy but the early results look promising. So true to the open source mantra of release early, release often, I'm releasing version 0.0.0 of smartcrop.js.

Source Code: github.com/jwagner/smartcrop.js

Examples: test suite with over 100 images and test bed to test your own images.

Command line interface: github.com/jwagner/smartcrop-cli

2D Wingsuit Game - first preview 31 Oct 2013 2:00 PM (11 years ago)

I'm not dead. I'm working on a 2D wingsuit game based on hand drawn levels. It's going to be cool. :) What do you think?

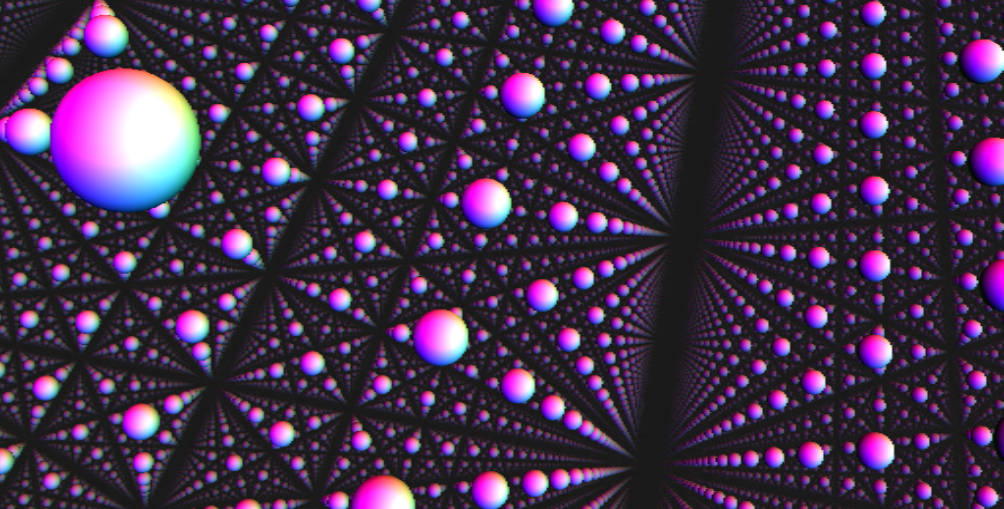

Wild WebGL Raymarching 25 Sep 2013 11:48 AM (12 years ago)

It's been way too long since I've released a demo. So the time was ripe to have some fun again. This time I looked into raymarching distance fields. I've found that I got some wild results by limiting the amount of samples taken along the rays.

Demo

Behind the scenes

If you are interested in the details, view the source. I left it unminified for you. The interesting stuff is mainly in the fragment shader.

Essentially the scene is just an infinite number of spheres arranged in a grid. If it is properly sampled it looks pretty boring:

Yes, I do love functions gone wild and glitchy. :)
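The principle can be sketched outside of a shader as well. The following is a plain JavaScript illustration and not the demo's fragment shader: the cell size, sphere radius, hit threshold and function names are all assumptions. The `maxSteps` parameter plays the role of the limited number of samples along each ray.

```javascript
// Modulo that also wraps negative numbers into [0, n).
function mod(a, n) {
  return ((a % n) + n) % n;
}

// Distance from point p to the nearest sphere of an infinite grid with
// one sphere per cubic cell. Space is folded into a single cell.
function sceneDistance(p, cell, radius) {
  var x = mod(p[0], cell) - cell / 2;
  var y = mod(p[1], cell) - cell / 2;
  var z = mod(p[2], cell) - cell / 2;
  return Math.sqrt(x * x + y * y + z * z) - radius;
}

// March along a ray, stepping by the distance to the nearest surface,
// and give up after maxSteps samples.
function march(origin, dir, maxSteps) {
  var t = 0;
  for (var i = 0; i < maxSteps; i++) {
    var p = [
      origin[0] + dir[0] * t,
      origin[1] + dir[1] * t,
      origin[2] + dir[2] * t
    ];
    var d = sceneDistance(p, 4, 1);
    if (d < 0.001) return { hit: true, t: t, steps: i };
    t += d;
  }
  // out of samples: the ray is cut off wherever it happens to be
  return { hit: false, t: t, steps: maxSteps };
}
```

With a generous `maxSteps` the rays that reach a sphere report a clean hit. With a small limit many rays stop short, and shading based on where they stopped is one way such glitchy results can come about.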

New Website 28 Aug 2013 2:48 PM (12 years ago)

Updating this website has been long overdue. It has been running on zine, a blog system that has not been maintained since 2009, for way too long. After looking for a replacement and not finding anything I liked I decided that it would be fun to write my own. ;)

I tried hard not to break any existing content. If something is not working anymore, let me know.

A few details about the system

My new website is maintained by a static website generator and served using nginx. This has the benefit of speed and trivial deployments via rsync. Comments are now handled with disqus.